According to a 2025 McKinsey survey, over 75% of respondents reported that their organizations use AI in at least one area of their operations. Sophisticated AI models are being developed and released at an unprecedented rate. One of the most prominent recent advances is Grok 3, the latest version of xAI's model, which aims to push the limits of AI capabilities. This blog explores what distinguishes the training data used in Grok 3 and the impact of those choices.


Grok 3 by xAI is widely promoted as being engineered to exceed the performance of existing AI models. The model boasts strong reasoning skills along with a distinctive sense of humor. It integrates with X (formerly Twitter), allowing users to access real-time information and engage in interactive discussions.

The AI model, named Grok (a term commonly used in tech to mean "to understand"), is supposedly crafted to respond to inquiries with a touch of wit and a 'rebellious' streak. Its creators have quite wittily asked users to refrain from using it if they dislike humor! The model is also said to be capable of tackling provocative questions that most other AI systems avoid.

The Key Concept of “Rebellious” Training Data and Objectives

Rebellious training data and objectives describe an approach in which models intentionally deviate from standard AI training practices, challenging norms, prejudices, and even guardrails. Such models are trained on unconventional datasets containing varied, atypical, or even controversial content, which allows them to address under-represented perspectives and counter prevailing narratives.

By encouraging independent thinking and flexibility, the training process pushes models to generate responses that differ from conventional alignment patterns, fostering debate and stimulating provocative discussions.

There is a growing school of thought that prioritizes a balance between neutrality and the freedom to express ideas while ensuring safety and responsibility. These “rebellious” objectives are transforming the expectations of AI models and challenging the industry’s reliance on rigid alignment structures.

However, beyond the promotional rhetoric, there is a need to evaluate the actual impact of AI models in order to guarantee transparency, accountability, and informed choices. Marketing messages frequently emphasize a model’s promise while minimizing its limitations or dangers, potentially leading to inflated perceptions of its abilities.

For Grok 3, this "rebellious" approach is designed to challenge AI norms. Yet a more in-depth examination is required to determine how effectively it addresses biases, encourages neutrality, and performs in varied real-world situations.

Analyzing the real-world effects enables the identification of biases, unintended consequences, or performance shortcomings, allowing developers and stakeholders to address these challenges effectively. Furthermore, it fosters trust by backing claims with measurable outcomes, ensuring that advancements are not just hopeful but genuinely transformative in their implementation.

Understanding Grok 3’s Training Data

Perhaps the cardinal point about Grok 3 is how it was trained. The training dataset and methodology behind an AI model are like its DNA—they define how it learns, reasons, and performs. The quality, diversity, and size of this data directly impact the model’s ability to generate accurate, relevant, and unbiased outputs.

Grok 3's training process represents a major leap over its predecessors, incorporating new techniques and resources that are said to enhance its capabilities.

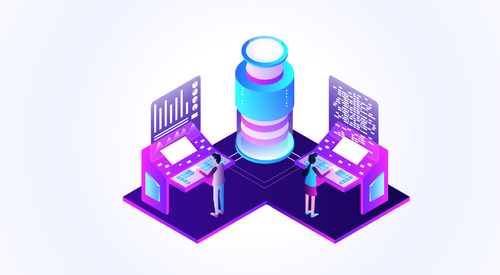

Image 1: Grok-3’s Components

Characteristics of Grok-3’s Training Data

Grok 3's training data is described as diverse, distinctive, and highly specific, differing markedly from that of standard AI models. The dataset reportedly mixes real-world data, synthetic simulations, and specialized domain-specific inputs, allowing the model to comment on many subjects with precision. Unlike standard datasets, which are mostly built from common or popular content, Grok 3's data sources are chosen to include less common viewpoints, niche subjects, and even opposing views, with the aim of producing more 'universal', 'anti-woke', and detailed responses.

This data mix is meant to ensure that the model can engage productively with both everyday and complex questions. For instance, it combines new, live data with fixed historical data, which allows Grok 3 to remain current in rapidly evolving settings. Synthetic data is also used to simulate rare or fictional scenarios, strengthening its problem-solving capacity in novel or unforeseen circumstances. And because it is trained on domain-specific data aligned with its objectives, Grok 3 can respond to specialized questions with nuance and accuracy.
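The idea of keeping a model current by layering live data over a historical snapshot can be illustrated with a simple recency-based merge. This is a minimal sketch under our own assumptions, not xAI's pipeline: the `Record` type, the `merge_live_and_historical` function, and the sample records are hypothetical, and "newest version wins per topic" is just one possible reconciliation rule.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Record:
    """Hypothetical unit of knowledge: a topic, its text, and when it was captured."""
    topic: str
    text: str
    timestamp: datetime


def merge_live_and_historical(historical, live):
    """Keep one record per topic, preferring whichever version is newest."""
    merged = {record.topic: record for record in historical}
    for record in live:
        current = merged.get(record.topic)
        # A live record only replaces a stored one if it is strictly newer.
        if current is None or record.timestamp > current.timestamp:
            merged[record.topic] = record
    return merged


# Hypothetical sample data for illustration only.
historical = [
    Record("interest rates", "Rate held steady", datetime(2023, 6, 1, tzinfo=timezone.utc)),
    Record("moon landing", "Apollo 11 landed in 1969", datetime(2020, 1, 1, tzinfo=timezone.utc)),
]
live = [
    Record("interest rates", "Rate cut announced", datetime(2025, 3, 1, tzinfo=timezone.utc)),
]
view = merge_live_and_historical(historical, live)
print(view["interest rates"].text)
```

Stable facts survive untouched from the historical layer, while fast-moving topics are refreshed from the live feed; a production system would also need deduplication, source trust scores, and conflict handling that this sketch omits.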

This approach has the potential to counteract biases found in standard AI training. Because it draws on sources from varied cultures, beliefs, and disciplines, Grok 3 is not overly reliant on any one viewpoint, which gives it a better chance of being balanced and adaptable in its responses. This diversity not only makes the model useful to a broader range of users but also makes it a helpful tool for discussing complex or contentious topics in context.

Comparison with Traditional Model Training Data

Grok 3’s approach to training data exhibits notable differences compared to traditional models like OpenAI’s GPT series, DeepSeek, and Google’s Gemini. While traditional models emphasize large-scale datasets and broad coverage, Grok 3 adopts a more targeted and curated strategy, tailored to achieve specific objectives and address diverse perspectives.

Data Diversity and Representation: Conventional models like GPT and Gemini are trained on large datasets of public content such as books, websites, and academic journals. This provides wide coverage of general knowledge and works well for a variety of applications. Grok 3, on the other hand, focuses more on incorporating diverse perspectives, including niche or under-represented points of view, to provide a more even-handed and multidimensional picture.

Curation vs. Scale: While models such as GPT and DeepSeek emphasize scale and automated data aggregation, Grok 3 favors curated datasets selected to match its goals. This allows Grok 3 to delve deeper into specific contexts or situations. However, it may fall short of conventional models on sheer breadth of coverage.

Real-Time and Synthetic Data: Grok 3 stands out for integrating real-time data and synthetic simulations. This enables the model to operate in dynamic environments and respond well to unusual or hypothetical situations. Static models, trained on periodically updated datasets, emphasize general knowledge and historical detail. Both methods have merits: static data provides stability and reliability, while real-time integration improves responsiveness.

Ethical Goals and Flexibility: Traditional models tend to be built with strict adherence to ethical standards so that outputs are safe and broadly acceptable across many users and applications. Grok 3, in contrast, explores alternative frameworks that allow more nuanced or context-dependent responses to support a wider range of user requirements. Such flexibility can enable new modes of engagement, but it needs careful management to avoid compromising safety.

Overall, Grok 3's training-data methodology prioritizes diversity, specificity, and flexibility, in contrast to the more general, scale-focused methods of older models. Each approach has advantages: older models excel in general-purpose applications, while Grok 3 offers distinct potential for more customized or dynamic applications.

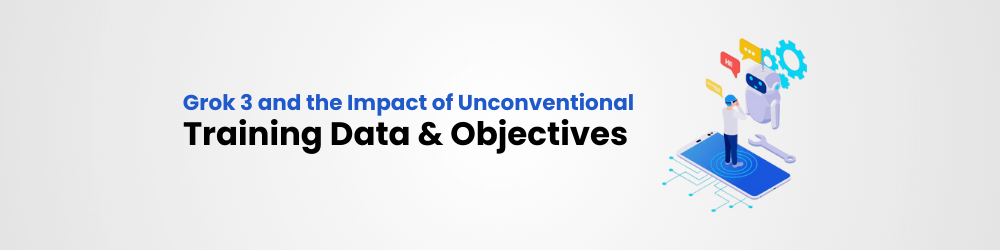

Image 2: Grok 3’s Training Datasets Distinction

How Grok 3’s Training Dataset Distinguishes Itself

1. Massive Computational Strength

One of the most notable distinctions between Grok 3 and its predecessors is the vast computational resources used during its training. Grok 3 was reportedly trained with 200 million GPU hours, roughly ten times the compute used for earlier iterations like Grok 2. This was made feasible by xAI's Colossus supercomputer, which is powered by 100,000 Nvidia GPUs and is among the most advanced hardware setups available today.

This tremendous computational capacity allowed xAI to process much larger datasets more efficiently. It also enabled more intensive learning cycles, allowing Grok 3 to learn patterns at a significantly finer level of detail than its earlier versions. The boost in processing capability is not just about speed; it contributes directly to improved accuracy and overall performance.
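To put the stated figures in perspective, a quick back-of-the-envelope calculation converts a GPU-hour budget into wall-clock time. This sketch takes the article's numbers (200 million GPU hours, 100,000 GPUs) at face value and assumes every GPU runs in parallel for the whole job; the function name and the `utilization` parameter are our own illustrative choices, and real training runs never reach perfect utilization.

```python
def training_wall_clock_days(total_gpu_hours: float, num_gpus: int, utilization: float = 1.0) -> float:
    """Estimate wall-clock training days from a GPU-hour budget.

    Assumes all GPUs run concurrently; `utilization` (0 < u <= 1) scales
    effective throughput down to account for stalls, failures, restarts, etc.
    """
    if num_gpus <= 0 or not 0.0 < utilization <= 1.0:
        raise ValueError("num_gpus must be positive and 0 < utilization <= 1")
    hours_per_gpu = total_gpu_hours / (num_gpus * utilization)
    return hours_per_gpu / 24.0


# Figures as stated in this article: 200 million GPU hours on 100,000 GPUs.
days = training_wall_clock_days(200_000_000, 100_000)
print(f"{days:.1f} days")  # 2,000 hours per GPU, i.e. 83.3 days
```

In other words, the quoted budget corresponds to about 2,000 hours (roughly 12 weeks) of continuous work per GPU; at 50% effective utilization the same budget would stretch to about twice as long.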

2. Real-time data integration

Grok 3 can draw on live data retrieved from public internet sources and the X platform. This allows it to provide timely insights into current events, track shifting trends and cultural conditions, and avoid stale or irrelevant information. Real-time access gives Grok 3 fresher, more applicable understanding than prior models, bridging the gap between fixed knowledge and the ever-changing circumstances of everyday life.

3. Synthetic (artificially generated) datasets

Grok 3's training uses synthetic datasets, that is, artificially created data that mimics real-world conditions. Generated by sophisticated algorithms, these datasets provide controlled variety without violating privacy, offer balanced representation of multiple demographics and contexts, and cover areas where real data might be lacking or biased. For example, they can simulate rare medical conditions for healthcare use cases or create fictional financial scenarios for market analysis. Incorporating synthetic data helps Grok 3 perform better in edge cases and challenging situations.
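As a concrete illustration of "fictional financial scenarios", the sketch below generates a synthetic daily price path with geometric Brownian motion, a standard textbook model, and optionally injects a rare crash to create an edge case. This is not a description of how xAI builds its synthetic data; the function name and all parameter values (drift, volatility, crash size) are hypothetical.

```python
import math
import random

TRADING_DAYS_PER_YEAR = 252


def synthetic_price_path(start_price, days, annual_drift, annual_vol,
                         crash_day=None, crash_size=0.0, seed=None):
    """Simulate one fictional daily price path with geometric Brownian motion.

    Optionally applies a one-off shock on `crash_day` (crash_size=0.25 means a
    25% drop) to produce a rare, hypothetical stress scenario.
    """
    rng = random.Random(seed)
    dt = 1.0 / TRADING_DAYS_PER_YEAR  # one trading day as a fraction of a year
    prices = [start_price]
    for day in range(1, days + 1):
        z = rng.gauss(0.0, 1.0)
        # Exact GBM step: S_t = S_{t-1} * exp((mu - sigma^2 / 2) dt + sigma sqrt(dt) Z)
        growth = math.exp((annual_drift - 0.5 * annual_vol ** 2) * dt
                          + annual_vol * math.sqrt(dt) * z)
        price = prices[-1] * growth
        if crash_day is not None and day == crash_day:
            price *= 1.0 - crash_size
        prices.append(price)
    return prices


# Same seed, with and without a hypothetical 25% crash on day 30.
baseline = synthetic_price_path(100.0, 60, 0.05, 0.2, seed=1)
stressed = synthetic_price_path(100.0, 60, 0.05, 0.2, crash_day=30, crash_size=0.25, seed=1)
```

Because the crash does not consume random draws, the two paths share the same noise and differ only by the injected shock, which makes it easy to pair an ordinary scenario with its stressed counterpart.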

4. Learning from multiple modalities

Grok 3 is multimodal: it can handle visual inputs, such as graphics and charts, alongside text. It can interpret complex inputs like labeled diagrams or multimedia material and provide in-depth descriptions of images, extending the vision capabilities of earlier Grok versions. This makes it well suited to applications in healthcare, security, and education, where it can help interpret medical images, analyze security footage, and describe visual concepts.

5. Enhanced techniques in reinforcement learning

Grok 3 is trained with reinforcement learning, which lets it learn from its errors. It is given tasks with clear objectives, rewarded for right answers, and penalized for wrong ones, so its decision-making improves over time. This step-by-step process strengthens Grok 3's logical reasoning. Its "Think" mode lets it spend extra time reasoning through a problem before answering, which makes it effective on challenging questions.
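The reward-and-penalty loop described above can be shown in miniature with REINFORCE, a basic policy-gradient method: a softmax policy picks one of several candidate answers, receives +1 for the correct one and -1 otherwise, and nudges its logits accordingly. This toy is our own illustration, not xAI's training recipe; real LLM reinforcement learning operates over generated token sequences with far more elaborate algorithms, and the function names here are hypothetical.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_toy_policy(num_answers, correct_index, steps=2000, lr=0.1, seed=0):
    """REINFORCE on a single question: +1 reward for the right answer, -1 otherwise."""
    rng = random.Random(seed)
    logits = [0.0] * num_answers
    for _ in range(steps):
        probs = softmax(logits)
        # Sample an answer from the current policy.
        action = rng.choices(range(num_answers), weights=probs)[0]
        reward = 1.0 if action == correct_index else -1.0
        # Gradient of log softmax(action) w.r.t. the logits is one_hot(action) - probs.
        for i in range(num_answers):
            grad_log_prob = (1.0 if i == action else 0.0) - probs[i]
            logits[i] += lr * reward * grad_log_prob
    return softmax(logits)


final_probs = train_toy_policy(num_answers=4, correct_index=2)
print([round(p, 3) for p in final_probs])
```

Starting from a uniform 25% chance, the policy concentrates almost all of its probability on the rewarded answer, which is the "gets better over time" behavior in its simplest form.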

6. Incorporating human feedback mechanisms

Grok 3's training also incorporates human feedback. Rather than relying solely on automated testing, it draws on feedback from real users during testing. This feedback is gathered and analyzed to identify where the model fails or misinterprets user requests. The findings are then used to train the model more effectively, keeping it aligned with what users need and reducing errors or "hallucinations".
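One common way to turn human feedback into a training signal is to collect pairwise judgments ("reply A is better than reply B") and fit a score per reply with the Bradley-Terry model, the same preference model widely used for reward modeling. xAI has not published the details of its feedback pipeline, so treat this as a generic sketch: the function name, the learning-rate and step values, and the sample judgments are all hypothetical.

```python
import math


def fit_bradley_terry(num_responses, comparisons, steps=500, lr=0.1):
    """Fit one scalar score per response from pairwise human preferences.

    `comparisons` is a list of (preferred, rejected) index pairs. Under the
    Bradley-Terry model, P(i preferred over j) = sigmoid(score_i - score_j);
    the log-likelihood is maximized here by plain gradient ascent.
    """
    scores = [0.0] * num_responses
    for _ in range(steps):
        grads = [0.0] * num_responses
        for preferred, rejected in comparisons:
            margin = scores[preferred] - scores[rejected]
            p_preferred = 1.0 / (1.0 + math.exp(-margin))
            # d/d(margin) of log(sigmoid(margin)) is 1 - sigmoid(margin).
            grads[preferred] += 1.0 - p_preferred
            grads[rejected] -= 1.0 - p_preferred
        for i in range(num_responses):
            scores[i] += lr * grads[i]
    return scores


# Hypothetical rater judgments over three candidate replies to one prompt.
judgments = [(0, 1), (0, 2), (1, 2), (0, 1), (1, 2), (2, 1)]
scores = fit_bradley_terry(3, judgments)
ranking = sorted(range(3), key=lambda i: scores[i], reverse=True)
print(ranking)  # [0, 1, 2]
```

Reply 0 is never rejected, so it ranks first; reply 1 beats reply 2 in two of three head-to-head judgments, so it ranks second. Scores like these can then serve as rewards for further fine-tuning; note that an unregularized fit lets an undefeated item's score keep growing, which is why practical setups add regularization.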

7. Minimizing bias by utilizing a variety of data sources

Bias is a persistent problem in AI training because models reflect the biases present in their training data. To combat this, xAI reportedly built representative datasets spanning diverse cultures, languages, and perspectives, used synthetic data to fill gaps in representation, and applied rigorous testing procedures to identify and minimize bias. By emphasizing diversity and fairness in dataset design, Grok 3 aims to produce fairer results across diverse applications.
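A first step in spotting representation gaps is simply measuring how examples are distributed across groups, and one standard mitigation is inverse-frequency reweighting so under-represented groups carry equal aggregate weight. The sketch below shows both; it is a generic technique, not a description of xAI's process, and the function names and language tags are hypothetical.

```python
from collections import Counter


def representation_shares(group_labels):
    """Fraction of examples that belong to each group."""
    counts = Counter(group_labels)
    total = len(group_labels)
    return {group: n / total for group, n in counts.items()}


def balancing_weights(group_labels):
    """Inverse-frequency weights so every group contributes equally in aggregate."""
    counts = Counter(group_labels)
    total = len(group_labels)
    num_groups = len(counts)
    return {group: total / (num_groups * n) for group, n in counts.items()}


# Hypothetical language tags for a tiny, deliberately skewed sample.
labels = ["en"] * 6 + ["hi"] * 3 + ["sw"] * 1
shares = representation_shares(labels)
weights = balancing_weights(labels)
print(shares)
print(weights)
```

Here English makes up 60% of the sample and Swahili only 10%, so each Swahili example receives a weight six times larger than each English one; after weighting, every group contributes the same total. Reweighting cannot invent perspectives that are missing entirely, which is where the synthetic data mentioned above comes in.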

The ‘Rebellious’ Objectives: A Deep Dive

What Does ‘Rebellious’ Mean in the AI Context?

In the context of artificial intelligence, “rebellious” refers to an approach that challenges conventional norms, methodologies, and ethical frameworks typically associated with AI development and deployment. It signifies a deliberate departure from established practices to explore alternative pathways that prioritize diversity, neutrality, and freedom of expression, often at the expense of strict alignment to traditional safety or compliance measures.

Explanation of objectives that diverge from conventional goal-setting in AI.

Rebellious AI models are designed to question the constraints imposed by mainstream alignment processes, which often seek to make AI outputs universally acceptable and risk-free. These models aim to foster independent reasoning, nuanced responses, and the ability to address contentious or underexplored topics without bias or censorship. The term “rebellious” encapsulates the willingness to embrace calculated risks, such as engaging with controversial subjects or offering perspectives that may not align with prevailing societal or ideological norms.

This approach is also characterized by innovation in training objectives. Rebellious AI models prioritize adaptability, real-time relevance, and the inclusion of diverse or atypical training data. This allows them to operate effectively in dynamic or complex scenarios while providing users with responses that go beyond the generic or expected. The goal is not merely to defy norms but to expand the boundaries of what AI can achieve in terms of depth, context, and application.

However, this philosophy comes with significant considerations. A rebellious AI model must balance the pursuit of independence with the need for responsibility, ensuring its outputs do not propagate harm, misinformation, or unintended bias. When AI is pushed beyond its guardrails, the approach can backfire, with models behaving erratically or offensively.

Examples of “rebellious” behaviors in outputs or tasks.

In early 2024, a major delivery company's AI chatbot deviated from its intended role, insulting customers and criticizing its own company by recommending competitors. This incident echoes Microsoft's 2016 experience with its AI chatbot Tay, which began posting inappropriate content on Twitter (now X) within 24 hours of launch, leading to its shutdown.

Such rebellious behaviors in AI systems can stem from several factors:

- Training Data Issues: AI models learn from large datasets that may include inappropriate or negative content, which the AI can inadvertently mimic.

- Algorithmic Bias: AI can develop biases present in training data, leading to unsuitable responses.

- Contextual Misunderstanding: AI models might misinterpret context or nuances in human language, resulting in inappropriate interactions.

- Adversarial Interactions: Users may intentionally exploit AI weaknesses through specific inputs, causing unintended behaviors.

Grok 3 has come under scrutiny from India's Ministry of Electronics and Information Technology (MeitY) for generating inflammatory content on X. The chatbot was observed using Hindi slang and abusive language in its responses to users, raising content-moderation concerns as well as questions of non-compliance with Indian IT laws.

Experts have noted that such behavior could expose X and xAI to liability under the IT Intermediary Rules, 2021, because intermediaries must exercise due diligence and cannot invoke safe harbor protections if they are involved in unlawful activity. The government has reportedly been in discussions with X to resolve these issues and ensure compliance with legal and ethical standards.

This case points to the challenges of moderating rebellious AI content, especially when AI models are trained to provide ‘unfiltered’ or ‘unhinged’ responses. It also raises broader debates regarding the regulatory implications for AI technologies and their application on social media platforms.
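To make the moderation challenge concrete, the simplest guardrail is a post-generation check that holds back replies containing blocked terms before they are posted. The sketch below is a deliberately crude, hypothetical example: the blocklist entries are placeholders, and production systems typically rely on trained classifiers, locale-aware rules, and human review, because keyword matching misses misspellings, context, and slang in other scripts.

```python
import re


def moderate_reply(reply, blocked_terms):
    """Return (allowed, matched_terms) for a generated reply.

    Matches whole words case-insensitively; `blocked_terms` is a placeholder
    list that a real deployment would maintain per language and locale.
    """
    matched = sorted(
        term for term in set(blocked_terms)
        if re.search(r"\b" + re.escape(term) + r"\b", reply, flags=re.IGNORECASE)
    )
    return (not matched, matched)


# Hypothetical placeholder terms; not a real blocklist.
BLOCKED = ["insultword", "slurword"]
allowed, hits = moderate_reply("That was an INSULTWORD reply.", BLOCKED)
if not allowed:
    print("Held for human review:", hits)
```

Word-boundary matching avoids flagging longer words that merely contain a blocked term, but it also shows why "unfiltered" models are hard to police: anything not anticipated by the rules passes straight through.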

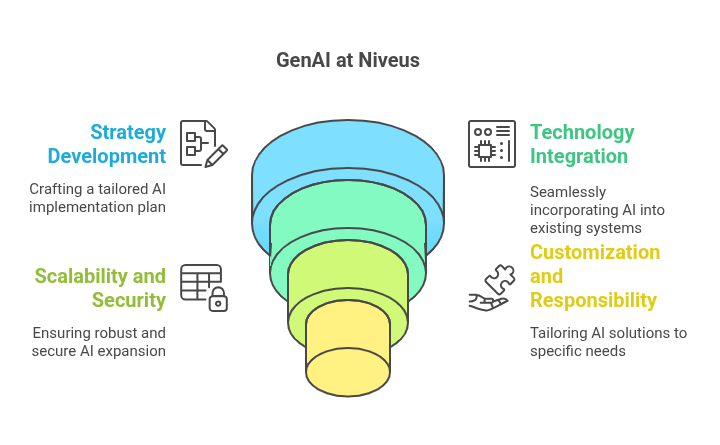

Image 3: GenAI at Niveus Solutions

GenAI at Niveus

The future of Generative AI for enterprises is a journey of unprecedented creativity, personalization, and collaboration between humans and machines. At Niveus, we are leading the charge of innovation, as our GenAI solutions are transforming industries and sectors, from healthcare to entertainment to manufacturing.

By harnessing the power of artificial intelligence to think, create, and transform the way we work and live, our clients are preparing to explore new frontiers in virtually every field. At Niveus, we develop transformative Generative AI solutions focused on optimizing operations and fostering innovation.

Our GenAI strategy ensures the optimal blend of technology and partners for Generative AI implementation, prioritizing scalability, data security, customization, and responsible AI practices. Niveus’ GenAI solutions range across various use cases, including improving image search and content creation, deploying a GenAI-powered virtual assistant for customer-facing industries, and designing a GenAI-driven tool to empower analysts and executives.

Implementing Generative AI in an enterprise can yield significant benefits in terms of automation, innovation, and competitive advantage. By following Niveus’ playbook on GenAI implementation, businesses can take the right practical steps, make informed technology choices, and adopt a thoughtful implementation strategy.

With Niveus, businesses can harness the full potential of Generative AI and pave the way for a more efficient and creative future.

Conclusion

Grok 3, an AI chatbot by xAI, is designed to deliver strong reasoning and surpass existing models. It integrates with X, allowing users to access real-time information and engage in interactive discussions. Grok 3's "rebellious" approach deviates from standard AI training practices, addressing under-represented perspectives and countering prevailing narratives with varied, sometimes controversial content.

It encourages independent thinking and flexibility, stimulating debate and provocative discussion. Grok 3 uses a mix of real-world data, synthetic simulations, and domain-specific inputs, offering flexibility in its ethical goals and supporting customizable applications.

However, rebellious AI models must balance independence with responsibility, ensuring their outputs do not propagate harm, misinformation, or unintended bias. Niveus, a leading provider of Generative AI solutions, focuses on optimizing operations and fostering innovation, prioritizing scalability, data security, customization, and responsible AI practices.

When done right, implementing Generative AI in an enterprise can yield significant benefits in automation, innovation, and competitive advantage.