In part 1 of this blog, we explored the rapid growth of generative AI and introduced multimodal AI: its potential for transformation and how it works. In part 2, we go deeper into how these AI models and agents are reshaping real-world healthcare applications, tackling challenges, and driving innovation.

Empower Healthcare Innovation with AI

Prompt Design

Regardless of the purpose of the model, the user starts with a prompt. Recently, for example, millions of users sent the GPT-4o model the prompt “Convert the following image to Studio Ghibli style art,” which led to a spike in traffic on the backend.

So, what is a prompt here?

A prompt is a natural language request submitted to the model to receive a desired response. The model’s response depends on the input the user provides. A good prompt typically consists of the following components, which help the model respond more accurately.

- Input – Simply, a request to the model. It can be a question, a task for the model to work on, or a generation request so the model can create something.

- Context – An optional component that guides how the model responds, such as steps to follow or reference points the model should consider.

- Examples – A few input-output pairs given to the model along with the input, showing the form of the desired output.

We can further classify prompts into three categories: zero-shot, one-shot, and few-shot prompting.

Zero-shot prompting: The user provides an input asking the model to perform a certain action, without giving any examples. Asking the model “What is zero-shot prompting?” is itself an example of zero-shot prompting, also called a “free prompt.”

One-shot prompting: The LLM is given the prompt together with a single example of the desired output.

If we ask the LLM, “What is the colour of the sky?” the response would be “Blue.” However, if we provide an additional example input, such as “What is the colour of the grass?” with the output “The colour of the grass is {Green},” the model understands the desired output format. As a result, when asked “What is the colour of the sky?” it would respond with “The colour of the sky is blue,” rather than just “blue.”

Few-shot prompting: The model is given a small set of examples showing how to perform a certain action. This reflects good prompt design: provide more context and make the prompt precise.
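The prompt components and the three prompting styles described above can be sketched in a few lines of Python. This is only an illustration, not a prescribed format: the function names `build_prompt` and `prompt_style`, the `Q:`/`A:` layout, and the snow example are assumptions made for this sketch, and a real model API may expect a different structure.

```python
from typing import Optional, Sequence, Tuple

Example = Tuple[str, str]  # (example input, example output)


def build_prompt(
    task_input: str,
    context: Optional[str] = None,
    examples: Sequence[Example] = (),
) -> str:
    """Assemble a plain-text prompt from optional context, examples, and the input."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    for example_input, example_output in examples:
        parts.append(f"Q: {example_input}\nA: {example_output}")
    # The real request goes last, with the answer left open for the model.
    parts.append(f"Q: {task_input}\nA:")
    return "\n\n".join(parts)


def prompt_style(examples: Sequence[Example]) -> str:
    """Name the prompting style implied by the number of examples."""
    if len(examples) == 0:
        return "zero-shot"
    if len(examples) == 1:
        return "one-shot"
    return "few-shot"


question = "What is the colour of the sky?"
grass = ("What is the colour of the grass?", "The colour of the grass is green.")
snow = ("What is the colour of snow?", "The colour of snow is white.")  # hypothetical extra example

zero_shot = build_prompt(question)
one_shot = build_prompt(question, examples=[grass])
few_shot = build_prompt(
    question,
    context="Answer in one full sentence.",
    examples=[grass, snow],
)
print(few_shot)
```

The only difference between the three prompts is how many examples precede the final question, which is why the examples are passed as a list rather than built into the function.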

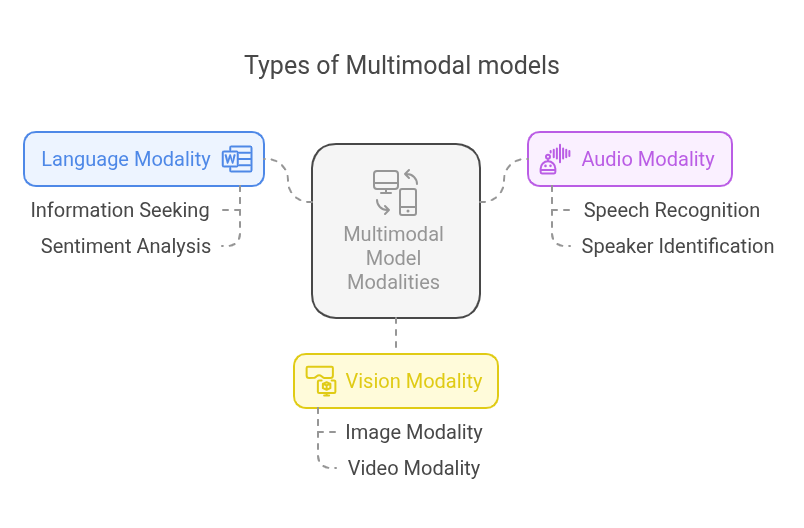

Image 1: Types of Multimodal AI

Types of Multimodal AI Models

- Language Modality – A widely used modality in multimodal models. It covers processing textual and structured data, such as user prompts or referenced documents containing text, mostly in the form of natural language requests. Applications include information seeking, information extraction, classification, and sentiment analysis.

- Audio Modality – Another commonly used modality, which involves processing audio data such as speech or tone. These models are important for their speech recognition capability and their understanding of audio channels. Applications include speech event detection, customer support and virtual assistants, music tone and genre classification, speech-to-text (extracting information from speech data), and speaker identification and classification.

- Vision Modality – This is further classified into two types:

  - Image Modality – This involves processing image data, mainly by classifying image pixels. The main applications include object detection in an image, entity recognition and extraction, image processing and classification, facial recognition, and content creation.

  - Video Modality – This goes one step beyond the image modality and involves processing video data, graphics, motion, and pixel movement. It helps detect the dynamic movement of objects. The most common applications are video recognition and extraction, caption generation, and content summarization, which are widely used in the media industry. It can further be combined with other modalities so that the model understands the input data better, enabling advanced applications such as emotion classification from video and speech, and video generation.

Domain-specific use case

AI solutions that benefit from multimodality fall into two groups: vertical solutions and horizontal solutions.

Vertical Solutions: These are industry-specific solutions that solve complex problems particular to one industry. For example, in the healthcare industry, AI can be leveraged to generate insights from healthcare data and thus support services across patients, doctors, and hospitals.

Horizontal Solutions: In contrast to vertical solutions, these AI solutions can be leveraged to solve similar problems across different industries. For example, a customer service chatbot can assist people and respond to user queries in any domain or sector.

Together, these solutions are considered general-purpose and now have a significant impact on many industries. Some of the most affected industries are healthcare, education, manufacturing, and finance.

A look at how AI can Change the Healthcare Industry

AI in healthcare is seeing substantial growth, and its market value is predicted to reach around $164.16 billion by 2030. The involvement of AI in healthcare is impactful and is expected to bring significant changes to the operations of hospitals and clinics. The technology keeps evolving through an iterative process, so its outputs can support more accurate diagnoses and personalized treatments. This approach can be described as AI-driven personalized treatment.

These LLMs can process medical data quickly, which enables early detection of diseases and provides critical insights. This shift not only supports patient treatment but also streamlines healthcare operations. By leveraging AI solutions, healthcare systems and services can become smarter and faster than they are with current techniques.

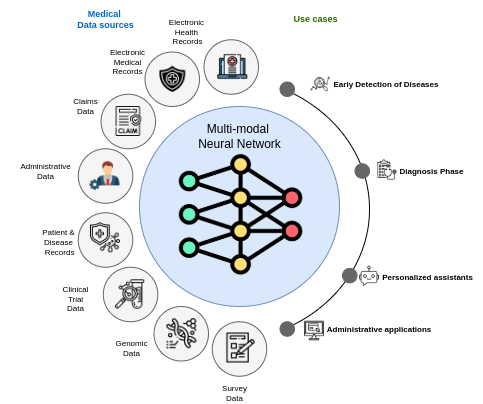

The capabilities of multimodal AI to handle the variants in the data type and to merge complex domain knowledge across the industry and multiple data types. These AI models can be served with many data sources across the industry.

Now let’s consider the data sources with a few use cases:

Image 2: Multimodal AI in the healthcare industry with use cases

- Early Detection of Diseases: Since multimodal AI is known for its ability to merge different data types and formats, data from various sources (such as wearable devices and health records) can be combined and used for early detection of diseases and proactive measures. Treatment of some critical diseases, such as cancer, depends on early detection and prevention. AI can scan a patient’s health records, biopsy images, and MRI scans much faster than doctors, reducing delays in diagnosis and treatment.

- Diagnosis or treatment phase:

- Clinical Diagnosis – When considering the broader scope of the problem in hospitals, AI can assist in completing diagnoses much faster than doctors. Additionally, AI can analyze health records and patient data to detect diseases based on image data from test results. AI has also made significant contributions to, and will continue to impact, the arduous task of drug development, employing data-driven techniques through deep learning. In recent years, DeepMind’s AI has been shown to detect over 50 eye diseases as accurately as doctors. The algorithms used in such models can diagnose a patient’s disease with a reported accuracy of 87%. The adoption of AI in healthcare has progressed well and is speeding up healthcare operations.

- Personalized assistants: AI is now being integrated with IoT wearable devices that monitor health. With AI built in, these devices can act as personalized assistants, improving the monitoring and tracking of health fluctuations so that issues are caught at an early stage. They capture and collate health data continuously, provide personalized recommendations, and flag irregularities.

- Robot Doctors: In the future, multimodal AI may advance to the point where it takes over the complete role of doctors, becoming so-called “Robot Doctors” that perform the complete diagnosis rather than only assisting with it, which is a very crucial task. According to life science data, the first semi-automated surgical robot was invented in 2017 and sutured blood vessels as narrow as 0.03 mm. Such surgical robots can be trained to perform their tasks as well as surgeons.

- Administrative applications: Integrating AI into healthcare operations has resolved many administrative challenges that previously required manual effort, such as claims processing, appointment scheduling, billing entry, and invoice generation. By automating these tasks, hospital management can shift its focus to more critical areas, including patient care and surgical advancements.
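To make the idea of combining data sources from the early-detection use case more concrete, here is a minimal sketch that merges health-record entries and wearable readings into one view per patient. Everything in it is hypothetical: the `PatientView` class, the `combine` function, the patient IDs, and the sample values are invented for illustration, and a real system would work with far richer, access-controlled data.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List, Optional, Tuple


@dataclass
class PatientView:
    """One combined view of a patient across two (hypothetical) data sources."""
    patient_id: str
    conditions: List[str] = field(default_factory=list)   # from health records
    heart_rates: List[int] = field(default_factory=list)  # from a wearable device

    @property
    def mean_heart_rate(self) -> Optional[float]:
        # None signals that no wearable data exists for this patient.
        return mean(self.heart_rates) if self.heart_rates else None


def combine(
    records: Dict[str, List[str]],
    readings: List[Tuple[str, int]],
) -> Dict[str, PatientView]:
    """Merge health records and wearable readings, keyed by patient ID."""
    views = {pid: PatientView(pid, list(conds)) for pid, conds in records.items()}
    for pid, bpm in readings:
        # A patient may have wearable data but no health record yet.
        views.setdefault(pid, PatientView(pid)).heart_rates.append(bpm)
    return views


# Invented sample data, for illustration only.
records = {"p1": ["hypertension"], "p2": []}
readings = [("p1", 72), ("p1", 80), ("p3", 65)]

views = combine(records, readings)
for view in views.values():
    print(view.patient_id, view.conditions, view.mean_heart_rate)
```

Keeping each source in its own field, rather than flattening everything into one record, leaves a downstream model free to weigh each modality separately.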

Challenges of Multimodal AI

Although these AI models are more impactful than traditional solutions and are developing rapidly, they face certain challenges when it comes to large-scale deployment.

- Representation: Multimodal AI needs a large amount of data to learn from, in particular large labeled datasets that span multiple modalities.

- Alignment: The model aims to build connections among different modalities, which requires data fusion to identify correlations among diverse data types. Achieving this alignment is often challenging, because the data must correspond at a particular point in time and space.

- Modal Translations: A typical challenge arises when the use case is to convert content from one modality to another, or from one language to another, also called multimodal translation. A basic example is asking the model to generate an image from a text prompt.

- Privacy concerns: AI models are trained on massive amounts of data, which may sometimes include users’ personal information. This can lead to privacy violations involving PII, speech, images, and other highly sensitive data.

- Evaluation Metrics: There is a real need for more advanced evaluation metrics for multimodal AI responses in order to improve model accuracy. The evaluation techniques available today are structured, traditional, and designed for specific tasks, so they have their limitations. There is still a need for a platform that visualizes how multimodal AI learns and identifies patterns across modalities, to help understand edge cases.
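The alignment challenge listed above can be illustrated with a small temporal example: pairing samples from two modalities by their timestamps. This is a simplified sketch under stated assumptions, not how any particular multimodal model performs alignment. The function names, the `tolerance` parameter, and the sample frames and speech segments are all hypothetical, and the secondary stream is assumed to be sorted by time.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple

Sample = Tuple[float, str]  # (timestamp in seconds, payload)


def nearest_index(timestamps: List[float], t: float) -> int:
    """Return the index of the timestamp closest to t (list sorted and non-empty)."""
    pos = bisect_left(timestamps, t)
    if pos == 0:
        return 0
    if pos == len(timestamps):
        return len(timestamps) - 1
    before, after = timestamps[pos - 1], timestamps[pos]
    return pos if (after - t) < (t - before) else pos - 1


def align(
    primary: List[Sample],
    secondary: List[Sample],
    tolerance: float,
) -> List[Tuple[str, Optional[str]]]:
    """Pair each primary sample with the nearest secondary sample in time.

    A pair whose timestamps differ by more than `tolerance` gets None,
    which models the case where one modality has no matching data.
    """
    if not secondary:
        return [(payload, None) for _, payload in primary]
    secondary_times = [t for t, _ in secondary]
    pairs = []
    for t, payload in primary:
        i = nearest_index(secondary_times, t)
        close_enough = abs(secondary_times[i] - t) <= tolerance
        pairs.append((payload, secondary[i][1] if close_enough else None))
    return pairs


# Invented sample data: video frames and transcribed speech segments.
video_frames = [(0.0, "frame_0"), (1.0, "frame_1"), (2.0, "frame_2")]
speech_segments = [(0.1, "hello"), (1.9, "goodbye")]

pairs = align(video_frames, speech_segments, tolerance=0.25)
print(pairs)
```

Even in this toy case, the middle frame has no speech close enough to pair with, which hints at why alignment across real, noisy modalities is hard.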

Conclusion

Multimodal AI signifies a new milestone in the development of intelligent systems, improving how machines sense, comprehend, and process the world. By combining different types of data (text, images, audio, and video) in one system, multimodal AI truly expands how we understand generative AI. The fields in which it is applied, including diagnostics in healthcare, optimization in manufacturing, content creation, and automation in business, are already changing the world.

At Niveus Solutions, we believe in applying advanced technology, such as multimodal AI, to address real business challenges. Our experience in implementing intelligent, AI-based solutions helps enterprise clients increase efficiency, improve customer experiences, and remain competitive in an ever-evolving digital marketplace. As this technology develops and matures, we will continue to be leaders in the Multimodal Intelligence Evolution, helping organizations apply and benefit from multimodal AI technology. In this part, we looked at the challenges of taking multimodal AI systems from experimentation to practice and highlighted real-world use cases of multimodal AI.