The rapid expansion of the Internet of Things (IoT) and 5G networks is opening new avenues of growth for businesses. Studies estimated that there would be nearly 30 billion connected devices by 2023. This paradigm shift, however, has generated a tidal wave of data from smart devices. Excessive latency, bandwidth costs, and legal restrictions on data sovereignty have become pain points for businesses as traditional systems struggle to keep pace.

Edge computing provides an alternative for businesses looking for solutions beyond the cloud. This two-part blog series examines the distinctions between edge computing and cloud computing in GCP, highlights key GCP solutions like Google Distributed Cloud Edge and Vertex AI Edge, and outlines an enterprise edge computing strategy with GCP.

Modernize your enterprise with GCP Edge and Cloud solutions

Edge computing, especially when built on Google Cloud Platform (GCP), is a valuable complement to the cloud. With its portfolio of Google Distributed Cloud (Edge and Software Only), Vertex AI Edge, and AlloyDB Omni, GCP allows organizations to ingest, analyze, and act on their data closer to the source while still integrating with cloud services. The benefits of edge computing in GCP include reduced latency, bandwidth conservation, easier compliance, and a single integrated edge and cloud environment for building and running scalable, near-real-time applications.

Understanding the Basics: Centralized Power vs Real-Time Agility

Cloud computing in GCP is a centralized processing architecture in which data is stored and processed in Google’s vast worldwide data centres. This method is best suited for applications that demand massive scalability and can tolerate some delay. Key services include BigQuery for large-scale data warehousing, Cloud Storage for scalable object storage, and Compute Engine for virtual machines. Cloud computing’s power stems from its capacity to handle large, complex jobs and historical data processing with virtually unlimited resources.

Edge computing in GCP, on the other hand, reduces latency and bandwidth usage by processing workloads near the source of the data. This is crucial for any application that requires real-time decision-making, which GCP enables through Google Distributed Cloud (GDC). GDC can be deployed either as software only or as a connected offering hosted on-premises. Anthos provides a consistent management layer across cloud and edge environments, so workloads are deployed and managed the same way in both. AI at the edge, for example with TensorFlow Lite, allows devices to perform tasks like image recognition locally, without staying continually connected to the cloud.

Edge Computing vs Cloud Computing in GCP – A Technical Comparison

| Parameter | Cloud Computing (GCP) | Edge Computing (GCP) |
| --- | --- | --- |
| Latency | Moderate to high: Data is sent to a distant data centre, which can cause delays and make it unsuitable for time-sensitive applications. | Ultra-low: Processing occurs near the data source, frequently within milliseconds, which is crucial for real-time applications. |
| Bandwidth usage | High: Raw data from devices is transmitted across the network to the cloud, which can be costly and bandwidth-intensive. | Low: Data is processed and filtered locally, with only critical, aggregated data being transferred to the cloud. |
| Processing location | Centralized: Google’s vast worldwide data centres serve as a centralised hub. | Decentralized: Distributed at or near the data source, which might be a manufacturing floor, retail shop, or remote equipment. |
| Data compliance | Restricted: Centralised data storage can complicate compliance with data residency regulations. Security is strong but dependent on provider policies. | Simplified: Data may be processed and kept locally, which helps to fulfil stringent regulatory and sovereignty requirements. |
| Scalability | Virtually limitless: Resources may be scaled up or down instantaneously and on demand to suit changing workloads. | Limited by local resources: Scaling involves adding more physical hardware at the edge, which can be more complex and costly. |
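To make the latency row more concrete, here is a rough back-of-the-envelope sketch. It assumes signals travel through optical fibre at about 200,000 km/s (roughly two-thirds of the speed of light in a vacuum) and it ignores routing, queuing, and processing time, so real round trips will be longer. The two distances are illustrative assumptions, not measurements of any GCP region or site.

```python
# Rough propagation-delay estimate; the distances below are illustrative assumptions.
FIBRE_SPEED_KM_PER_S = 200_000  # approximate signal speed in optical fibre


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay in milliseconds."""
    return 2 * distance_km / FIBRE_SPEED_KM_PER_S * 1000


cloud_rtt = round_trip_ms(2_000)  # hypothetical distant cloud region
edge_rtt = round_trip_ms(1)       # hypothetical on-site edge node

print(f"cloud: {cloud_rtt:.2f} ms, edge: {edge_rtt:.2f} ms")
```

Even before any processing happens, distance alone sets a floor on response time, which is why moving compute closer to the data source helps time-sensitive workloads.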

Benefits of Edge Computing in GCP

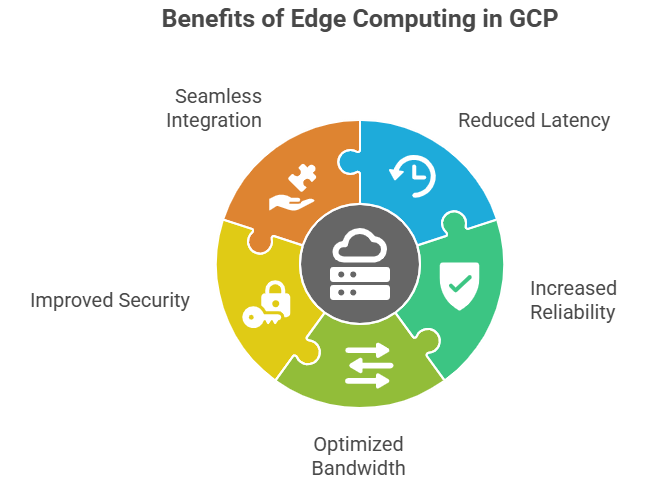

Edge computing in GCP provides particular benefits to organizations working in environments where speed, resilience, and compliance are crucial. By processing data closer to its source, GCP’s edge solutions help overcome the limits of standard cloud models. Key benefits include:

- Reduced Latency: Edge computing reduces system response time by processing data on the device, rather than sending it all to a remote cloud data centre. For real-time applications that process parallel data streams, such as autonomous driving, factory automation, and live video analytics, this is critical, as even millisecond delays can lead to catastrophic outcomes.

- Increased Reliability and Availability: By reducing the dependence on persistent cloud connectivity, edge computing helps reduce downtime. Edge applications remain operational and keep processing data even if the connection is intermittent or lost. This decentralised architecture avoids a single point of failure, so the failure of one edge node does not affect the entire system.

- Optimised Bandwidth and Cost-effectiveness: There is no requirement to send all data to the cloud. Edge computing filters, processes, and analyzes the data near the source so that only the relevant or minimal data travels up to the central cloud for storage. This saves on data transfer cost and preserves bandwidth.

- Improved Security and Data Residency: By processing data locally, organizations minimize exposure to public networks, reducing risks of interception or compromise. For sensitive industries like healthcare and finance, this means stronger protection. Local processing also supports compliance with data sovereignty laws, keeping information within regional boundaries while still enabling selective transfer to the cloud for advanced analytics.

- Seamless Integration with Cloud Services: GCP’s edge solutions, including Google Distributed Cloud Edge, integrate seamlessly with its core cloud services. This enables businesses to control distributed edge deployments and cloud workloads from a unified platform. You can use connected cloud services such as AI/ML, analytics, and storage to handle the filtered data sent from edge locations, and still benefit from real-time processing at the edge.

Fig 1: Benefits of edge computing in GCP
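The bandwidth and reliability benefits above can be illustrated with a small sketch. It summarises raw readings locally and queues the summaries until an uplink is available, so only aggregated data leaves the site. The `EdgeAggregator` class, its parameters, and the `uploaded` list that stands in for a cloud ingestion endpoint are all hypothetical names chosen for illustration; this is not a GCP API.

```python
from collections import deque
from statistics import mean


class EdgeAggregator:
    """Summarises raw readings locally and queues summaries until an uplink is available."""

    def __init__(self, window_size: int, max_pending: int = 100):
        self.window_size = window_size
        self._window = []
        # Bounded queue: the oldest summaries are dropped first if an outage outlasts the buffer.
        self.pending = deque(maxlen=max_pending)

    def add_reading(self, value: float) -> None:
        self._window.append(value)
        if len(self._window) == self.window_size:
            self.pending.append({
                "count": len(self._window),
                "min": min(self._window),
                "max": max(self._window),
                "mean": mean(self._window),
            })
            self._window = []

    def flush(self, send, online: bool) -> int:
        """Send queued summaries via `send` if online; return how many were sent."""
        if not online:
            return 0
        sent = 0
        while self.pending:
            send(self.pending[0])   # send first, remove only after it succeeds
            self.pending.popleft()
            sent += 1
        return sent


uploaded = []  # stands in for a cloud ingestion endpoint (hypothetical)
agg = EdgeAggregator(window_size=5)
for reading in range(1, 11):  # ten raw readings: 1..10
    agg.add_reading(float(reading))

sent_offline = agg.flush(uploaded.append, online=False)  # uplink down: nothing leaves the site
sent_online = agg.flush(uploaded.append, online=True)    # uplink restored: summaries go out
```

Here ten raw readings become two summaries, and an outage only delays the upload rather than stopping local processing.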

Enterprise Edge Computing Strategy with GCP

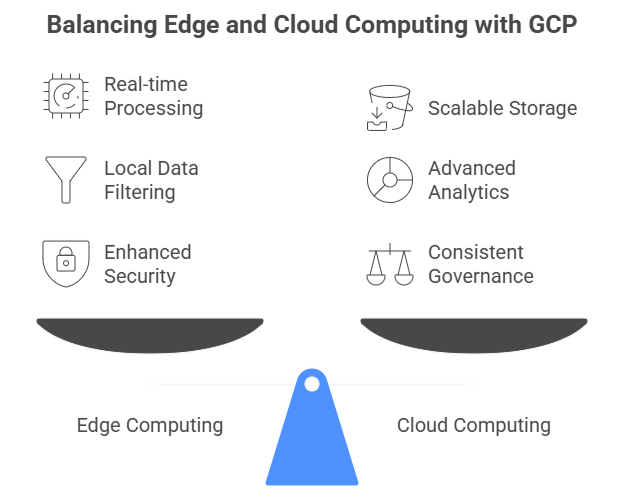

Any robust enterprise edge computing strategy with GCP combines local processing with the sheer scale and intelligence of the cloud. With GCP, businesses can create a consistent edge-to-cloud continuum that optimizes performance, compliance, and innovation. The main features of this strategy are:

- Identifying Workloads That Belong at the Edge: Not all workloads need to be processed at the edge. The most common use cases that benefit are real-time applications that depend on low latency and on continuing to run locally during outages, including IoT analytics, retail point of sale, and manufacturing operations.

- Hybrid Workload Orchestration using Google Distributed Cloud (GDC) Software: GDC provides a unified console that enables you to manage applications across cloud, on-premises, and edge environments. This offers consistent operations, policy enforcement, and scalability no matter where your applications run.

- Local to Cloud Analytics: Edge appliances enable real-time data filtering and analysis so that insights are not delayed. The processed data can then be transmitted securely to the cloud for storage at scale, advanced analytics with BigQuery, and retraining of AI models using Vertex AI.

- Security, Governance, and Monitoring for Distributed Workloads: GCP strengthens edge deployments considerably with enterprise-grade security controls, compliance frameworks, and monitoring. This gives locally hosted workloads the same governance, resiliency, and observability as cloud-based workloads.

Fig 2: Balancing Edge and Cloud Computing in GCP with real-time processing, security, storage, and analytics.
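The first point in the strategy above, deciding which workloads belong at the edge, can be sketched as a simple rule set. The `Workload` fields, the 50 ms threshold, and the example workloads are assumptions made for illustration; a real assessment would weigh cost, hardware, and operational factors as well.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    max_latency_ms: float       # slowest acceptable response time
    must_run_offline: bool      # must keep working if the uplink drops
    data_must_stay_local: bool  # residency or sovereignty constraint


# Hypothetical threshold: below this, a cloud round trip is assumed to be too slow.
EDGE_LATENCY_THRESHOLD_MS = 50.0


def place(w: Workload) -> str:
    """Return 'edge' or 'cloud' using three simple rules."""
    if w.data_must_stay_local or w.must_run_offline:
        return "edge"
    if w.max_latency_ms < EDGE_LATENCY_THRESHOLD_MS:
        return "edge"
    return "cloud"


workloads = [
    Workload("point-of-sale checkout", 200.0, True, False),
    Workload("assembly-line defect detection", 20.0, False, False),
    Workload("quarterly sales reporting", 60_000.0, False, False),
]
placements = {w.name: place(w) for w in workloads}

for name, target in placements.items():
    print(f"{name}: {target}")
```

Checkout lands at the edge because it must survive an outage, defect detection because of its latency budget, and reporting stays in the cloud where scale matters more than speed.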

GCP Edge Computing Solutions

GCP edge computing solutions like Google Distributed Cloud Edge provide enterprises with cloud-grade services close to where data is generated.

GDC, as a fully managed solution for edge and on-premises deployments, combines hardware, software, platform, and applications into a single unified platform to enable cloud-like operations at the edge. This setup enables organizations to operate current workloads with scalability, consistency, and security equivalent to Google Cloud, but closer to the data source.

Deployment Models

- Connected – Managed via the Google Cloud control plane for seamless hybrid operations.

- Software-Only – Deployed on customer-provided hardware while still retaining GDC’s cloud-first capabilities.

Key Capabilities

- Appliance-Based Deployment – Google-managed hardware and software for predictable performance.

- Full Stack for Edge Workloads:

  - Compute – Virtual Machines and Google Kubernetes Engine (GKE)

  - Database – AlloyDB Omni for edge-optimized data management

  - AI/ML – Vertex AI at the edge for real-time inference

- Secure Connectivity – Integration with Cloud WAN ensures reliable, secure communication between edge and cloud.

AI/ML – Vertex AI at the Edge for Real-Time Inference

Running AI/ML workloads at the edge using Vertex AI enables organizations to perform real-time inference directly where data is generated. This offers several advantages:

- Ultra-Low Latency: Eliminates round-trip delays to the cloud, enabling faster decision-making for time-critical use cases such as predictive maintenance, quality control, and anomaly detection.

- Improved Data Governance & Compliance: Sensitive data can remain within defined geographic or organizational boundaries, supporting regulatory compliance (like GDPR) and reducing risks associated with data transfer.

- Enhanced Security Through Model Isolation: Deploying models locally ensures that both data and model artifacts stay isolated from external environments, protecting proprietary algorithms and reducing attack surfaces.

- Bandwidth Efficiency: Minimizes the amount of data transmitted to the cloud, reducing network costs and improving performance in bandwidth-constrained environments.

This approach is especially beneficial for organizations that prioritize data sovereignty, need to meet strict regulatory requirements, or want to maintain tighter control over their AI models and data assets.
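As a minimal sketch of the pattern described above, the example below scores each reading locally and forwards only the anomalies. A rolling z-score check stands in for a trained model, so it does not use any Vertex AI API. The class name, thresholds, and sensor values are made up for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class LocalAnomalyDetector:
    """Stand-in for a model deployed at the edge: flags readings far from the recent mean."""

    def __init__(self, history: int = 20, threshold: float = 3.0):
        self.recent = deque(maxlen=history)
        self.threshold = threshold

    def is_anomaly(self, value: float) -> bool:
        flagged = False
        if len(self.recent) >= 5:  # wait for a little history before scoring
            mu = mean(self.recent)
            sigma = pstdev(self.recent)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                flagged = True
        if not flagged:
            self.recent.append(value)  # keep outliers out of the baseline
        return flagged


detector = LocalAnomalyDetector()
readings = [20.1, 19.9, 20.0, 20.2, 19.8, 20.1, 35.0, 20.0]  # made-up sensor values
to_cloud = [r for r in readings if detector.is_anomaly(r)]   # only anomalies leave the site

print(to_cloud)
```

The decision is made on site with no network round trip, and seven of the eight readings never need to be transmitted.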

Security at the Edge

GDC is designed with enterprise-grade security from the ground up.

- Supply Chain Security – Google controls manufacturing and software delivery.

- Zero-Trust Architecture – Security standards are applied locally and uniformly across environments.

- Hardware Root of Trust – Features such as secure boot, firmware validation, and tamper detection safeguard devices at all levels.

- Compliance Consistency – Uniform governance and monitoring across edge and cloud deployments.
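To illustrate the general idea behind firmware validation, here is a simplified sketch that compares an image's SHA-256 digest with a known-good value. It is not how GDC implements its hardware root of trust; real secure boot relies on cryptographic signatures anchored in hardware. The function name and the placeholder image bytes are hypothetical.

```python
import hashlib
import hmac


def firmware_is_trusted(image: bytes, expected_sha256_hex: str) -> bool:
    """Compare the image's SHA-256 digest with a known-good value in constant time."""
    actual = hashlib.sha256(image).hexdigest()
    return hmac.compare_digest(actual, expected_sha256_hex)


good_image = b"example firmware v1"                  # placeholder bytes, not a real image
known_good = hashlib.sha256(good_image).hexdigest()  # would come from a trusted source
tampered_image = good_image + b"\x00"                # a single extra byte changes the digest

print(firmware_is_trusted(good_image, known_good))
print(firmware_is_trusted(tampered_image, known_good))
```

Any change to the image, however small, produces a different digest, so a device can refuse to run firmware that does not match the expected value.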

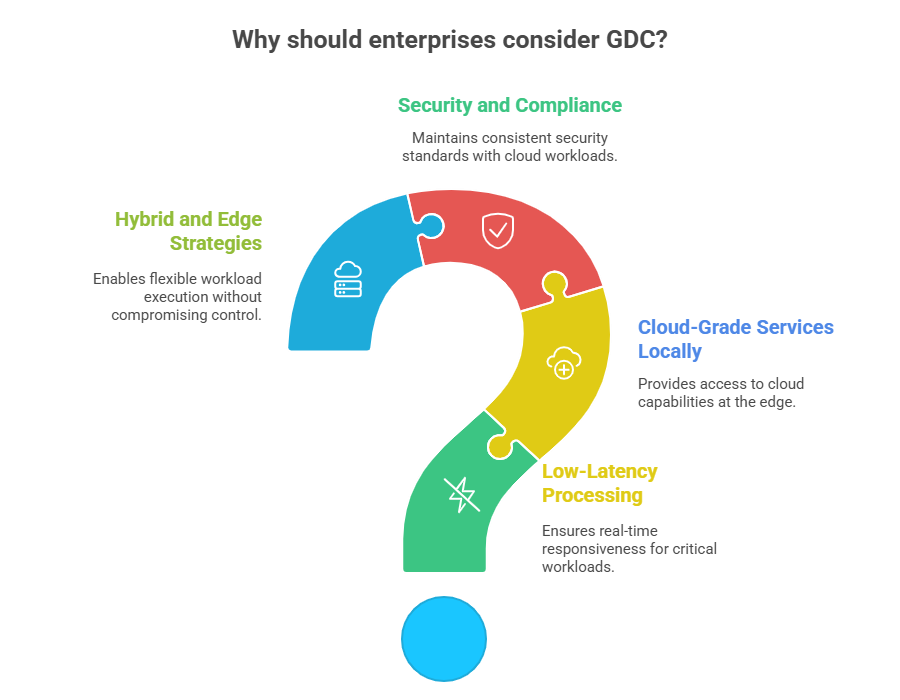

Why GDC Matters for Enterprises

- Low-Latency Processing – Delivers real-time responsiveness for mission-critical workloads.

- Cloud-Grade Services Locally – Access to GCP’s compute, database, and AI capabilities without leaving the edge.

- Security and Compliance – Edge workloads follow the same security and compliance rules as cloud workloads.

- Hybrid and Edge-First Strategies – Enable companies to execute workloads where they perform best, without compromising control or consistency.

Fig 3: Why GDC Matters for Enterprises

Conclusion

Edge computing and cloud computing are not competing technologies, but rather complementary components of a modern and integrated strategy. Cloud computing on GCP offers unrivalled scalability, centralised processing, and long-term data analysis, all of which are required for large-scale data warehouses, complex machine learning model training, and worldwide applications. It’s where you gain deep insights, train your AI, and manage your entire system.

The real benefit comes from a hybrid strategy: using Google Distributed Cloud Edge for in-region, low-latency processing, and Google Cloud’s extensive services for compute-intensive workloads and long-term analysis. In Part 2 of our blog, we take a closer look at when to choose edge versus cloud in GCP, explore hybrid approaches, highlight real-world use cases across industries, and share Niveus best practices to help enterprises design resilient, future-ready architectures.