With over 75% of organizations currently testing Generative AI across different functions, AI in the workplace is reshaping how businesses operate. However, this transformation brings complex challenges around employee well-being, data protection, and ethical adoption, as AI innovation continues to outpace regulation. In this blog, we look at how organizations can offer a safe, compliant, and human-centered foundation for Generative AI.

Build safe, human-centered AI workplaces

In the new era of innovation, companies must prioritize safe technology use and clear policies on data, privacy, and AI ethics. A culture of continuous learning helps employees adapt, so that GenAI can improve productivity. Aligning strategy, technology, and human well-being can help organizations maximize potential while protecting core values.

The Balancing Act: Building Responsible AI Workplaces

As AI becomes woven into the fabric of modern businesses, success depends on striking a balance between innovation and accountability. The path to responsible GenAI lies at the intersection of four interconnected pillars: employee wellness, security, compliance, and adoption. Each pillar is critical to ensuring technology helps people, enhances processes, and builds trust.

1. Employee Wellness – Putting People Front and Center

Integrating AI in the workplace can be a big challenge for organizations because it is more about people than technology. Poorly considered adoption of AI has been shown to produce stress and uncertainty for employees, who often face new skill requirements as the nature of their jobs changes. In fact, one survey found that 68% of workers are experiencing anxiety about AI’s impact on their jobs, and 45% of employees feel they are not equipped to engage in AI workflows.

- Job Satisfaction & Security: AI-driven disruption is accelerating both job losses and job anxiety, and older employees are being affected at a greater rate than younger employees. In one survey, 57% of respondents indicated that anxiety about AI replacing jobs has negatively impacted their engagement and productivity.

- Mental Health: The introduction of AI can worsen employee stress and burnout, adding to the economic losses linked to poor mental health in India. Over 80% of white-collar employees report adverse mental health symptoms that affect their well-being and performance at work.

- Work-Life Balance: AI can improve employee flexibility by eliminating repetitive tasks; however, it also encourages an “always on” work culture. Structured work cultures lead to 30% higher employee satisfaction when balancing work and personal lives.

2. Enforcing Regulatory Compliance in AI

Adopting AI in the workplace brings unique regulatory challenges that demand strategic planning. Rapid AI innovation often outpaces regulations, leaving organizations vulnerable to legal, operational, and reputational risks.

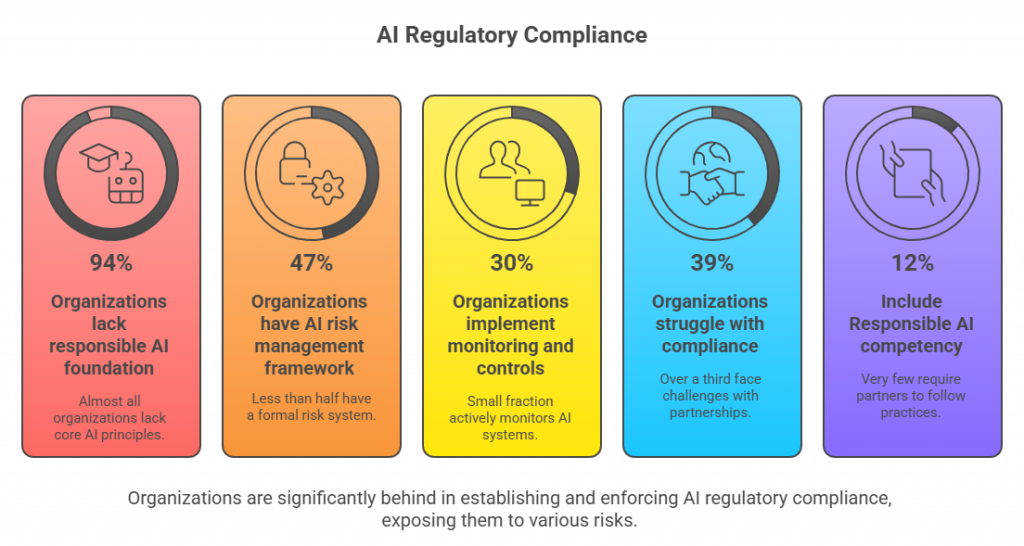

- 94% of organizations lack a responsible AI foundation. This means almost all organizations have not yet established core principles or policies for developing and using AI responsibly. Without this foundation, AI projects can pose ethical, legal, or reputational risks.

- Only 47% have an AI risk management framework. Fewer than half of organizations have a formal system to identify, assess, and mitigate AI-related risks. This leaves many AI initiatives vulnerable to errors, bias, or unintended consequences.

- Merely 30% implement monitoring and controls to mitigate AI risks. Fewer than a third of organizations actively monitor AI systems in real time or apply controls to prevent negative outcomes. Even where risks are known, they may not be effectively managed.

- 39% struggle with compliance due to partnerships. Over a third of organizations face challenges ensuring AI compliance when working with third-party vendors or partners, highlighting that responsibility doesn’t end within the organization; it extends across collaborations.

- Only 12% of third-party contracts include Responsible AI competency. Very few organizations require partners to follow Responsible AI practices, which can expose them to risk if a vendor’s AI systems are biased, unsafe, or non-compliant with regulations.

Figure 1: Visual representation of organizations enforcing regulatory compliance in AI

3. Mitigating Cybersecurity Risks in AI

AI integration enhances efficiency but also increases exposure to cyberattacks, so robust security measures such as data encryption and access controls are needed to protect against financial, legal, and reputational damage. Let’s take a look at some of the challenges businesses face in integrating AI, along with the measures that address them:

- Complexity and Interconnectivity: AI systems are highly interconnected, forming a network of applications, data sources, and devices. This increases the number of potential vulnerabilities and calls for robust system design and monitoring.

- Data Sensitivity: AI systems process vast amounts of sensitive data, which makes them attractive targets for cybercriminals and highlights the need for stringent data protection measures.

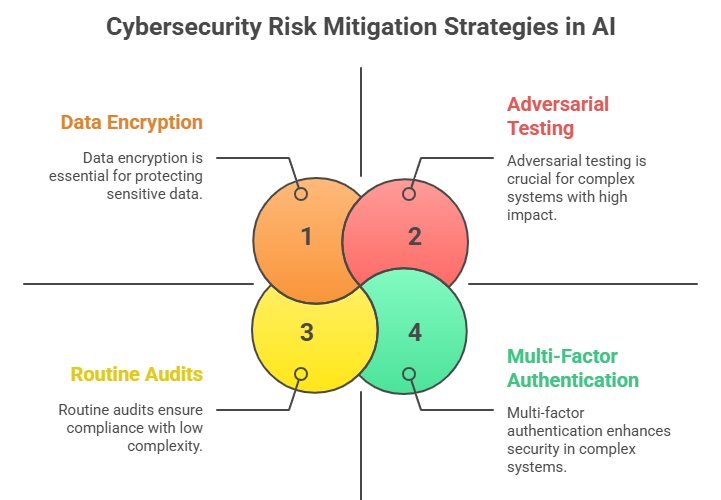

- Building Robust Security Frameworks: A strong security posture begins with implementing comprehensive security frameworks. This includes regularly updating AI systems and infrastructure to patch known vulnerabilities, conducting routine audits, and ensuring adherence to cybersecurity best practices.

- Addressing AI-Specific Vulnerabilities: AI is exposed to risks such as adversarial attacks, which manipulate input data and can lead to incorrect outputs, operational disruptions, or critical failures in areas such as healthcare diagnosis or financial decision-making.

- Data Encryption and Access Controls: Encryption and access controls, including multi-factor authentication and role-based access control, are essential for protecting sensitive information and ensuring that only authorized personnel can interact with critical data and AI systems.

- Adversarial Testing and AI Model Security: Adversarial testing simulates attacks on AI systems to identify weaknesses and improve resilience, while robust training strengthens systems against manipulation and maintains reliability under malicious attempts.

Figure 2: Visual illustration of cybersecurity risk mitigation strategies in AI
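To make the access-control point above more concrete, here is a minimal sketch of how role-based access control and a multi-factor check might gate actions on an AI system. The role names, action strings, and the `is_allowed` helper are all hypothetical, chosen only for illustration; a real deployment would typically delegate these decisions to an identity provider or a policy engine rather than an in-code table.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping (an assumption for this sketch).
ROLE_PERMISSIONS = {
    "analyst": {"model:query"},
    "data_scientist": {"model:query", "dataset:read"},
    "ml_admin": {"model:query", "model:deploy", "dataset:read", "dataset:write"},
}

# Actions assumed to be sensitive enough to require a completed MFA check.
MFA_REQUIRED = {"model:deploy", "dataset:write"}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    mfa_verified: bool = False


def is_allowed(user: User, action: str) -> bool:
    """Return True only if the user's role grants the action and, for
    sensitive actions, the user has passed multi-factor authentication."""
    permitted = ROLE_PERMISSIONS.get(user.role, set())
    if action not in permitted:
        return False  # the role does not grant this action (deny by default)
    if action in MFA_REQUIRED and not user.mfa_verified:
        return False  # sensitive action attempted without MFA
    return True


if __name__ == "__main__":
    admin = User("asha", "ml_admin", mfa_verified=True)
    analyst = User("ravi", "analyst")
    print(is_allowed(admin, "model:deploy"))
    print(is_allowed(analyst, "dataset:read"))
```

The sketch denies by default: an unknown role or an unlisted action is rejected, which is generally the safer choice when the permission table is incomplete.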

4. Overcoming AI Adoption Barriers

AI adoption in the workplace faces barriers such as fear of job displacement, cultural pushback, and data privacy concerns. To overcome these, organizations should prioritize openness, inclusive engagement, phased adoption, robust support, and strong leadership to accelerate AI use and maximize benefits.

Common Resistance Factors

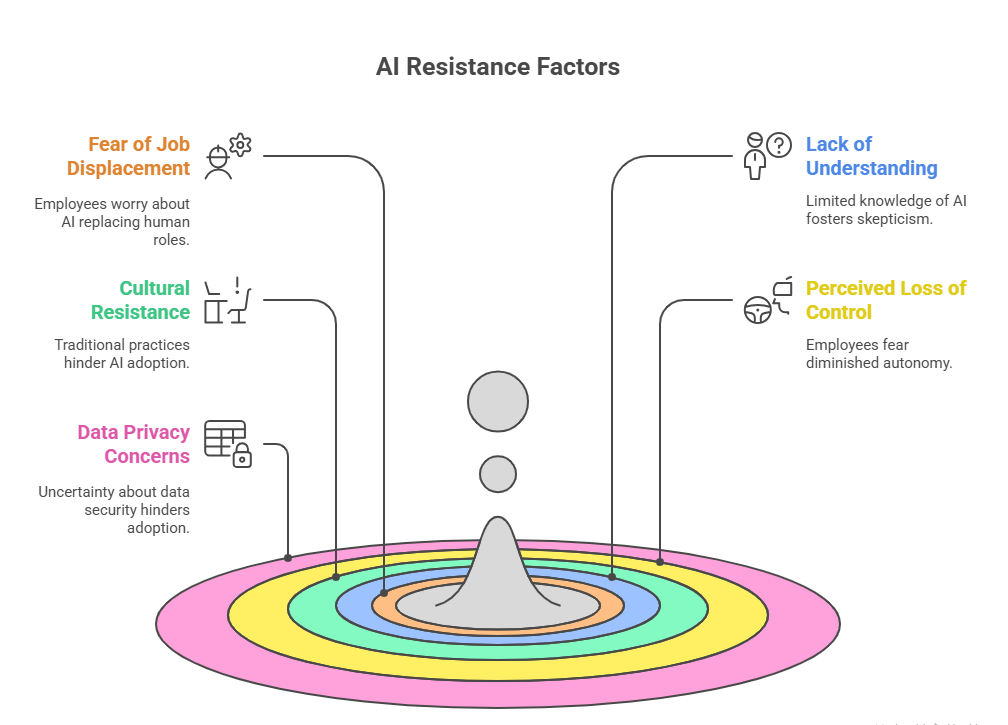

- Fear of Job Displacement: Many employees worry that AI will replace human jobs. This fear can trigger resistance to adopting new technologies, slowing down implementation and reducing engagement.

- Lack of Understanding: A limited understanding of AI capabilities and benefits can foster skepticism. Employees may perceive AI as overly complex or irrelevant to their roles, leading to hesitation or pushback.

- Cultural Resistance: An organizational culture that favors traditional practices can be a significant barrier. Long-standing workflows and reluctance to change make it challenging to introduce AI effectively.

- Perceived Loss of Control: Employees may fear that AI systems will diminish their autonomy, affecting how they perform their work. This perceived loss of control can lead to resistance.

- Concerns About Data Privacy and Security: Uncertainty about how AI handles sensitive data can hinder adoption. Employees and stakeholders need reassurance about the security measures protecting their information.

Figure 3: Visual illustration of AI resistance factors

Strategies to Overcome Resistance

- Transparent Communication: Clearly articulating AI’s benefits and its impact on roles and processes builds trust. Regular updates, open forums, and Q&A sessions can demystify AI and alleviate fears.

- Inclusive Involvement: Involving employees in pilot projects and seeking their feedback fosters ownership and reduces resistance. When employees feel integral to the process, they are more likely to embrace AI.

- Gradual Implementation: Introducing AI incrementally rather than all at once allows employees to adapt at a comfortable pace. Phased adoption reduces anxiety and builds confidence in the technology.

- Support Systems: Training programs, help desks, and continuous learning opportunities ensure employees feel equipped to work with AI tools effectively. Support structures encourage adoption and smooth transitions.

- Leadership Support and Advocacy: Visible and active support from leadership sets the tone for the organization. Leaders who champion AI initiatives and demonstrate tangible benefits inspire confidence and motivate adoption across teams.

The Niveus Approach

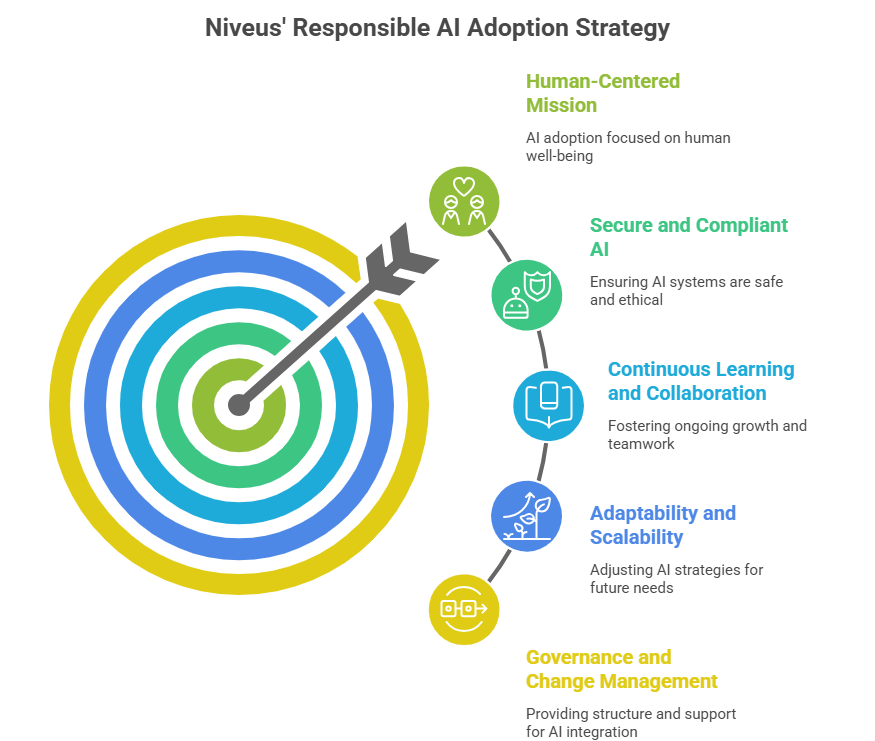

At Niveus Solutions, responsible AI adoption is more than a technical challenge; it’s a human-centered mission. By combining technical innovation with empathy, Niveus helps organizations implement GenAI in a secure, compliant, and employee-focused manner. Their approach emphasizes continuous learning, collaboration, and adaptability, ensuring AI initiatives are scaled thoughtfully while maintaining trust, well-being, and operational integrity. Through tailored frameworks, clear governance, and change management support, Niveus enables enterprises to harness AI as a growth engine that amplifies both business outcomes and human potential.

Figure 4: Niveus’ responsible AI adoption strategy

Safeguarding AI Systems: Security Beyond Automation

Niveus’ whitepaper explores how enterprises can strengthen their digital defense as AI in the workplace expands their data ecosystem. With interconnected systems and AI-driven automation introducing new vulnerabilities, cybersecurity becomes foundational to responsible innovation.

What’s Inside:

- Key cybersecurity risks unique to AI systems

- Real-world best practices from finance, healthcare, and manufacturing sectors

- Frameworks for encryption, access control, and adversarial testing

- Strategies for proactive monitoring and incident response

Download our whitepaper to explore how to build secure, compliant, and human-centric AI workplaces.

Conclusion

Generative AI is transforming workplaces, but success lies in people. Human-centric AI workplaces balance well-being, ethical standards, and adaptability. By designing empathetic, secure, and inclusive AI strategies, organizations can harness disruption to enhance human potential, not replace it.

Our whitepaper, AI in the Workplace: Balancing Employee Wellness, Compliance, Security, and Adoption, dives deeper into how organizations can prioritize employee wellness, enforce AI ethics and compliance, strengthen cybersecurity, and drive adoption to create responsible, resilient, and people-first AI environments.