In an era where Artificial Intelligence (AI) is rapidly transforming industries and daily life, with over 90% of companies now exploring or implementing AI solutions (studies), its influence is pervasive. AI models, often labeled as “black boxes,” are increasingly used for critical business decisions such as medical diagnoses and financial approvals, and the demand to understand why these decisions are made is surging. In this blog, we’ll delve into the critical field of Explainable AI (XAI), exploring its core methodologies, key benefits, and its essential role in building AI systems that are powerful, transparent, trustworthy, and accountable.


The increasing opacity of AI systems has created a substantial business case for Explainable AI (XAI). XAI goes beyond seeing inside the algorithms; it tries to bridge complex AI logic and human understanding. With XAI, AI decisions can be evaluated for fairness and bias, debugged when recommendations are unusual or incorrect, and trusted for critical decisions. This is pivotal for businesses in tightly regulated, high-consequence fields such as healthcare, finance, and autonomous systems, where mistakes can have severe consequences.

What is Explainable AI (XAI)

Explainable AI is an emerging field that seeks to describe the behaviour of AI models in comprehensible terms. It focuses on offering tools that help human users understand, analyse, and ultimately trust the outcomes of machine learning algorithms. By reducing the opacity of advanced models, XAI techniques give stakeholders insight into the reasoning behind AI decisions.

In contrast to black-box systems, explainable AI (XAI) models promote greater understanding and oversight, making them essential for sensitive applications where trust, fairness, and compliance are critical. As conversations around explainable AI vs. responsible AI grow, XAI is emerging as a foundation for ensuring that intelligent systems behave transparently and can be held accountable.

Core Concepts:

- Interpretability: It refers to how well a human can understand the reasoning behind a model’s decision or prediction. An interpretable model helps users grasp the relationships in the data and the logic behind its outputs, enabling them to make sense of why the model behaved a certain way.

- Transparency: In AI, this means providing clear visibility into a model’s inner workings, including its design, parameters, and data processing methods. Transparency focuses on exposing the system’s mechanics, rather than just its outcomes, so stakeholders can inspect and verify how it operates.

- Trustworthiness: Trust is an essential component of any technology adoption, particularly for AI in commercial settings. Explainable AI increases confidence by demystifying AI processes, and studies suggest that XAI helps make AI systems more transparent and less mysterious. It signals to customers that AI is not merely flashy technology but also a reliable decision-maker.

Figure 1: Explainable AI (XAI) Cycle

Black-Box versus White-Box Models:

White-Box Models (Inherently Interpretable Models): These AI models are simple and easy to understand. While interpretable, they may not capture complex patterns in the data as accurately as more sophisticated models. Examples include Decision Trees, Linear Regression, and Rule-Based Systems.

Black-Box Models (Opaque Models): These are complex AI systems whose internal decision-making is difficult to inspect. They generally achieve high accuracy at the expense of transparency. Examples include Deep Neural Networks (DNNs) and Ensemble Models. Much of XAI focuses on explaining these black-box models.

Figure 2: Black-Box versus White-Box Models

How Explainable AI Works: Core Methodologies

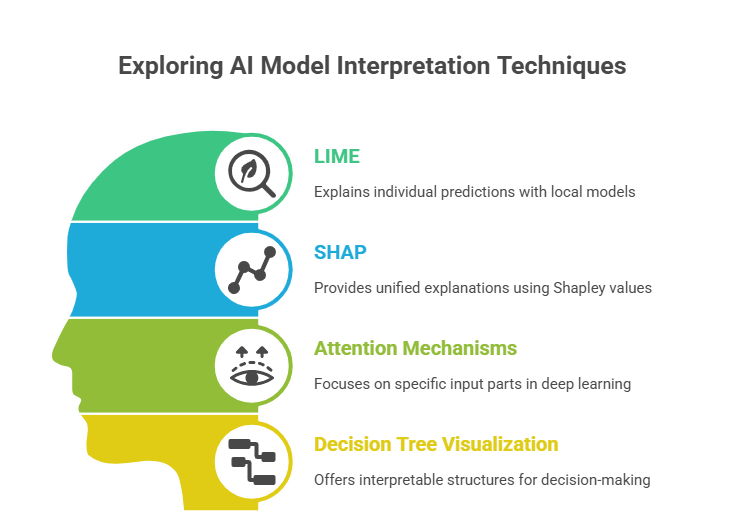

Understanding how explainable AI (XAI) delivers its insights is crucial. XAI techniques generally fall into two broad categories: Model-Agnostic Techniques and Model-Specific Techniques. Explanations can also be categorized by their scope: Local or Global.

Overview of Approaches:

- Model-Agnostic Techniques: These methods treat the model as a black box and rely only on its inputs and outputs, so they can be applied to any model. They are among the most popular XAI approaches, with LIME and SHAP being widely adopted: LIME and SHAP are used in nearly 70% of studies that interpret AI models for Alzheimer’s disease prediction (PubMed Central, 2024).

- LIME (Local Interpretable Model-agnostic Explanations):

- Concept: Explains individual predictions by building a simpler, local model that approximates the black-box model’s behavior around that specific prediction.

- Mechanism: Generates perturbed versions of the input data and observes how the black-box model’s prediction changes. A simple, interpretable model (typically linear) is then trained on these perturbed data points, weighted by how close each one is to the original input.

- Output: Feature importance scores that highlight the most influential features of the input for that particular instance.

- SHAP (SHapley Additive exPlanations):

- Concept: Provides a unified, theoretically sound approach to explain individual predictions by assigning an “importance value” to each feature, based on cooperative game theory.

- Mechanism: Calculates Shapley values, each representing a feature’s weighted average marginal contribution across all possible combinations of the other features.

- Output: Consistent and locally accurate attributions, showing how each feature pushes the prediction away from a baseline (the model’s expected output). These are commonly visualized through “waterfall” or “summary” plots.


- Model-Specific Techniques: These methods exploit the internal structure of particular model types, so each applies only to the kind of model it was designed for.

- Attention Mechanisms (in Deep Learning):

- Concept: Allows deep learning models, especially those used for NLP and computer vision, to “focus” on specific parts of the input when making a prediction.

- Mechanism: An attention layer assigns weights to the input components (such as words or image regions); a higher weight means the model attends more closely to that component.

- Output: Visualized as heatmaps over text or images, directly showing salient regions that influenced the model’s decision.

- Decision Tree Visualization:

- Concept: Models based on decision trees have an interpretable structure by design.

- Mechanism: A decision tree is a hierarchy of explicit conditions, or rules, on the input features; following them from the root to a leaf yields a prediction.

- Output: The whole decision-making process can be drawn as a flowchart, making the path from input features to the predicted outcome clear.

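To make the LIME mechanism concrete, here is a minimal pure-Python sketch of the idea, not the `lime` library’s API. It assumes a hypothetical two-feature `black_box` model, Gaussian perturbations around the instance, and an exponential proximity kernel (the real library has its own sampling scheme and kernel defaults). The slopes of the weighted linear surrogate serve as the local feature-importance scores.

```python
import math
import random


def black_box(x):
    # Hypothetical opaque model: a nonlinear score over two features.
    return 1.0 / (1.0 + math.exp(-(3.0 * x[0] - 2.0 * x[1] ** 2)))


def solve(a, b):
    # Solve the square linear system a @ w = b (Gaussian elimination, partial pivoting).
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def lime_explain(model, x, num_samples=500, scale=0.5, kernel_width=0.75, seed=0):
    """Return per-feature slopes of a locally weighted linear surrogate around x."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(num_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]            # perturbed sample near x
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weights.append(math.exp(-dist2 / kernel_width ** 2))    # closer samples count more
        rows.append([1.0] + [zi - xi for zi, xi in zip(z, x)])  # intercept + offsets from x
        targets.append(model(z))                                 # query the black box
    k = len(x) + 1
    # Weighted least squares via the normal equations: (X^T W X) beta = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * r[i] * y for w, r, y in zip(weights, rows, targets)) for i in range(k)]
    beta = solve(xtwx, xtwy)
    return beta[1:]  # drop the intercept; the slopes are the importance scores


scores = lime_explain(black_box, [0.5, 1.0])
print([round(s, 3) for s in scores])
```

For the instance `[0.5, 1.0]`, the first score comes out positive (raising feature 0 raises the score near this point) and the second negative, matching the sign of the model’s local behaviour.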

Figure 3: Exploring AI Model interpretation technique

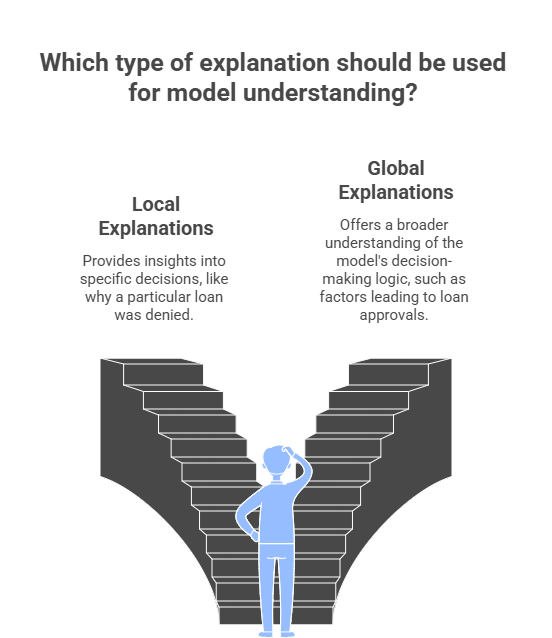
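The attention weights described under Model-Specific Techniques are typically produced by a softmax over query-key similarity scores. The sketch below assumes scaled dot-product attention with made-up two-dimensional vectors (`tokens`, `keys`, and `query` are hypothetical, not taken from a trained model) and prints each token next to its weight as a plain-text stand-in for a heatmap.

```python
import math


def softmax(scores):
    # Numerically stable softmax: subtract the max before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    # Scaled dot-product scores q . k / sqrt(d), normalised so they sum to 1.
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)


def text_heatmap(tokens, weights):
    # Crude text "heatmap": annotate each token with its attention weight.
    return " ".join(f"{tok}[{w:.2f}]" for tok, w in zip(tokens, weights))


# Hypothetical 2-d key vectors for four tokens and one query vector.
tokens = ["the", "loan", "was", "denied"]
keys = [[0.1, 0.0], [0.5, 0.5], [0.0, 0.1], [1.0, 0.9]]
query = [1.0, 1.0]

weights = attention_weights(query, keys)
print(text_heatmap(tokens, weights))
```

Here “denied” receives the largest weight because its key is most aligned with the query. Attention weights show where a model attends, which is not always a complete account of why it decided, so such heatmaps are best read alongside other explanation methods.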

- Local vs. Global Explanations:

- Local Explanations: Explain why a specific prediction was made; for example, the reasons behind a particular loan denial.

- Global Explanations: Aim to understand the overall behavior or decision-making logic of the entire model. This helps answer general questions, such as which factors typically lead to loan approvals. Global explanations are harder to obtain for complex black-box models, but aggregated SHAP plots can offer global insights.

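The local-versus-global distinction can be illustrated with the Shapley values behind SHAP. The sketch below computes exact Shapley values by enumerating every subset of features and filling “absent” features from a single baseline (here the feature means), which is one common simplification; the `shap` library instead averages over background data and uses faster approximations, because exact enumeration grows exponentially with the number of features. The `loan_score` model and the applicant data are hypothetical. Averaging absolute attributions across instances mirrors how aggregated SHAP summaries give a global view.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for a coalition of this size that excludes feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                present = set(coalition)
                without_i = [x[j] if j in present else baseline[j] for j in range(n)]
                with_i = [x[j] if (j in present or j == i) else baseline[j] for j in range(n)]
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


def loan_score(v):
    # Hypothetical model over (income, debt, credit_history) with one interaction term.
    income, debt, history = v
    return 0.5 * income - 0.8 * debt + 0.3 * history + 0.2 * income * history


applicants = [[1.2, 0.4, 0.9], [0.3, 0.9, 0.2], [0.8, 0.1, 0.7], [0.5, 0.6, 0.4]]
baseline = [sum(col) / len(applicants) for col in zip(*applicants)]  # feature means

# Local explanation: how each feature moved applicant 1's score away from the baseline.
local = shapley_values(loan_score, applicants[1], baseline)
print("local:", [round(p, 3) for p in local])

# Global explanation: mean absolute Shapley value per feature across all applicants.
all_phi = [shapley_values(loan_score, a, baseline) for a in applicants]
global_importance = [sum(abs(p[i]) for p in all_phi) / len(all_phi) for i in range(3)]
print("global:", [round(g, 3) for g in global_importance])
```

By construction, each local attribution list sums to `loan_score(x) - loan_score(baseline)` (the efficiency property of Shapley values).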

Figure 4: Local vs. Global Explanations

Key Components of an XAI System

- Prediction Accuracy: Prediction accuracy matters to machine learning practitioners and data scientists because it largely determines a model’s success. It is typically measured by training the model on data patterns and then checking its accuracy on a held-out test set. Explainability does not change a model’s accuracy, but techniques such as LIME, which runs many simulations on perturbed inputs, help reveal why the model performs as it does. LIME explains AI model predictions at the local level.

- Interpretability: Also known as traceability, interpretability is a crucial factor in building models. It involves visualizing the attributes in the data, determining their correlations, and removing irrelevant columns. In machine learning or AI models, interpretability involves breaking down what the model has learned into traceable pieces, such as decision tree rules and important features. DeepLIFT (Deep Learning Important FeaTures) contributes to interpretability by comparing each neuron’s activation with a reference activation and attributing the resulting difference in the prediction back to the input features. Breaking a model’s reasoning into smaller, traceable parts makes problems easier to diagnose and fix. These are examples of widely used explainable AI (XAI) techniques.

- Justifiability: The focus is on addressing human mistrust of AI and ML models by making each outcome justifiable. Consider a decision tree that predicts a student’s chances of admission to a tech program based on their marks: a student with 85% marks in computer science can readily get into CSE (Computer Science and Engineering), while a student with 80% marks and excellent extracurricular skills may qualify for a BTech program. Understanding which factors influence a result, and how changing the inputs changes the output, is crucial for achieving justifiability in AI and ML models. This aligns closely with broader discussions of explainable AI vs. responsible AI, where justifiability supports trust, while responsibility also covers fairness and ethical governance.
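The admission example also shows why decision-tree visualization supports justifiability: every prediction comes with the exact chain of conditions that produced it. The minimal sketch below hand-codes such a tree. The `Node` class, the feature names `cs_marks` and `extracurricular`, the assumption that both thresholds apply to computer-science marks, and the “Not admitted” fallback leaf are all hypothetical; only the 85% and 80% thresholds come from the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Node:
    # A leaf has a label; an internal node has a test and two children.
    label: Optional[str] = None
    description: str = ""
    test: Optional[Callable[[dict], bool]] = None
    if_true: Optional["Node"] = None
    if_false: Optional["Node"] = None


def predict_with_path(node, student):
    """Walk from the root to a leaf, recording each condition checked."""
    path = []
    while node.label is None:
        passed = node.test(student)
        path.append(f"{node.description} -> {'yes' if passed else 'no'}")
        node = node.if_true if passed else node.if_false
    return node.label, path


# Hypothetical tree for the admission example; the fallback leaf is an assumption.
admission_tree = Node(
    description="cs_marks >= 85",
    test=lambda s: s["cs_marks"] >= 85,
    if_true=Node(label="CSE"),
    if_false=Node(
        description="cs_marks >= 80 and extracurricular == 'excellent'",
        test=lambda s: s["cs_marks"] >= 80 and s["extracurricular"] == "excellent",
        if_true=Node(label="BTech"),
        if_false=Node(label="Not admitted"),
    ),
)

label, path = predict_with_path(admission_tree, {"cs_marks": 80, "extracurricular": "excellent"})
print(label)
for step in path:
    print("  ", step)
```

For a student with 80% marks and excellent extracurriculars, this prints `BTech` followed by the two conditions checked, which is exactly the justification a reviewer would need.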

Real-World Use Cases of Explainable AI

Explainable AI (XAI) is more than just a research topic. It is increasingly becoming a necessary practice across industries for deploying AI responsibly, efficiently, and in a trustworthy way. Some important real-world use cases are:

- Healthcare: XAI helps physicians verify an AI diagnosis (for instance, by highlighting tumor regions in medical images for validation) and surfaces the patient data that most influenced a recommended treatment plan, laying the groundwork for trust in critical decisions.

- Financial Services: XAI is critical for transparency and compliance because it provides explicit reasoning for loan approvals and denials (for example, which credit score criteria applied) and explains why transactions are flagged as suspicious in fraud detection, promoting both regulatory compliance and consumer trust.

- Autonomous Systems: Essential for safety, XAI clarifies why an autonomous vehicle suddenly changes its path (for example, after detecting an obstacle), helping engineers catch bugs early, boost reliability, and build public trust.

- Criminal Justice: XAI can justify risk assessments (e.g., for parole decisions) and expose the factors behind them to scrutiny for bias, helping to ensure fairness and to monitor the use of AI in legal contexts.

- Manufacturing and Quality Control: XAI helps engineers learn why AI vision systems flag certain products as defective, pinpointing the anomalies detected so that production processes can be optimized and quality improved.

How Niveus Utilizes Explainable AI (XAI)

At Niveus, we believe that trust is the foundation of responsible AI adoption. That’s why Explainable AI (XAI) is integrated into the way we design, build, and deploy AI solutions across industries.

- Model Interpretability from Day One: Our AI/ML pipelines can incorporate interpretability frameworks like SHAP, LIME, and counterfactual explanations during development, not just after deployment. This enables our teams and clients to understand what’s influencing predictions, helping spot bias early and improving model trustworthiness.

- Human-Centric Dashboards: Niveus builds custom model explainability dashboards that visualize AI decision logic in a business-friendly way. These help non-technical users, including compliance teams and domain experts, confidently assess model behavior.

- Regulatory Compliance & Audit Readiness: For clients in finance, insurance, and healthcare, we use XAI to ensure AI models comply with GDPR, RBI, and other regulatory standards. Every AI decision can be traced, justified, and documented, which is essential for audits and risk management.

Conclusion

According to research, 78% of organisations consider it crucial to trust that their AI’s output is fair, safe, and dependable. This highlights the rising demand for transparency in AI systems, which supports human oversight, ethical compliance, and trust. Explainable AI (XAI) is a field that continues to evolve to bridge the gap between machine intelligence and human understanding. As organisations strive to use AI more responsibly, adopting explainability is critical.