According to recent data, about 34% of businesses globally are actively implementing AI, with India leading at a 59% adoption rate, followed closely by the United Arab Emirates at 58%, Singapore at 53%, and the US at around 33%. As the world shifts toward data-driven decision-making, organizations use artificial intelligence (AI) to improve management and customer relations. This blog explores the essentials of Google Cloud AI deployment, best practices for deploying AI apps on Cloud Run, and how Cloud Run improves customer interaction, along with the data transformation strategies that support it.

As learning algorithms and AI systems become more complex, businesses seek stable and efficient platforms for running these models. Google Cloud AI deployment offers a comprehensive set of tools that cover the entire AI lifecycle, from data preparation and model training to deployment and real-time inference.


Effective deployment strategies are essential for getting the most out of AI applications. They help address critical challenges such as handling large-scale datasets, optimizing model performance, and keeping workloads adaptable as AI requirements change. Cloud Run, Google Cloud's serverless container platform, plays a central role here. Deploying AI applications on Cloud Run lets businesses ship and scale AI models while spending less effort on infrastructure management.

Understanding Google Cloud AI Deployment

AI application deployment means taking a trained artificial intelligence model and integrating it into a live system, so that its predictions or functionality are available to users in a real application. In other words, deployment is what lets the model carry out real-world tasks in a specific environment. Google Cloud AI deployment is designed to help organizations integrate machine learning models into their existing workflows.

Google Cloud allows firms to choose the services they think would best suit them. Whether deploying generative AI models or classic machine learning algorithms, Google Cloud has a robust ecosystem available for multiple use cases.

Core Stages of Google Cloud AI Deployment

- Data Preparation (Data Preprocessing & Transformation): Data preparation is the process of collecting, cleaning, and converting raw data into a structured format ready for model training. It comprises data extraction, cleaning, normalization, feature engineering, and transformation that ultimately ensures model accuracy and performance.

Google Cloud Services for Data Preparation:

- BigQuery: It stores and processes large quantities of structured and semi-structured data.

- Cloud Dataflow: Processes batch and streaming data for transformation and feature engineering.

- Dataprep: Provides an AI-driven, no-code tool that quickly and easily prepares data sets for use.

A well-prepared dataset reduces noise and irregularities and ensures that a machine learning model learns from high-quality, pertinent, and unbiased data, which translates to better predictions.

- Model Training: Training is the process in which prepared data is fed into a machine learning algorithm so that it learns the patterns and relationships in that data. It involves selecting a model architecture, tuning hyperparameters, and provisioning computational resources such as GPUs and TPUs for optimal performance.

Google Cloud Services for Model Training:

- Vertex AI Training: A managed service for training models with AutoML or with custom frameworks such as TensorFlow and PyTorch.

- TPUs (Tensor Processing Units): Specialized hardware accelerators used for training deep learning models.

- AI Platform Training (Now a part of Vertex AI): Provides large-scale distributed training for custom AI models.

Training determines how well the model generalizes to unseen data. Using optimized cloud resources can shorten training times and improve model accuracy while reducing costs.

- Model Deployment (Serving and Inference): Model deployment is the set of procedures through which an AI model, having been trained on historical data, is made available to real-world applications. This mainly entails serving predictions via APIs, incorporating the model into business applications, and ensuring it scales to handle user requests efficiently.

Relevant Google Cloud Services for Model Deployment:

- Cloud Run: A serverless compute platform that runs AI applications and inference models as containerized applications.

- Vertex AI Endpoints: This service provides complete management for deploying and serving AI models with automatic scaling.

- Cloud Functions: An event-driven lightweight deployment enabling inference models to run whenever events occur.

Deploying models enables AI applications to deliver predictions in real time or in batch mode, with high uptime and scalability. Cloud Run lets a business handle dynamic AI workloads without managing infrastructure.
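To make "serving predictions via APIs" concrete, here is a minimal sketch of an inference endpoint that could be packaged into a container for Cloud Run. It uses only the Python standard library. The `predict` function is a stand-in that simply averages its inputs, and the `/predict` path and JSON field names are illustrative assumptions rather than part of any Google API. The one Cloud Run-specific detail is that the container listens on the port supplied in the `PORT` environment variable.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Placeholder model (assumption): returns the mean of the inputs as a score.

    A real service would load a trained model once at startup and call it here.
    """
    if not features:
        raise ValueError("features must be a non-empty list of numbers")
    return sum(features) / len(features)


class PredictHandler(BaseHTTPRequestHandler):
    """Handles POST /predict with a JSON body like {"features": [1, 2, 3]}."""

    def do_POST(self):
        if self.path != "/predict":
            self._send(404, {"error": "not found"})
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length) or b"{}")
            score = predict(payload.get("features", []))
        except (ValueError, TypeError, AttributeError) as exc:
            # Malformed JSON, wrong types, or empty input all map to a 400.
            self._send(400, {"error": str(exc)})
            return
        self._send(200, {"score": score})

    def _send(self, status, body):
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def main():
    # Cloud Run passes the listening port through the PORT environment variable.
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("0.0.0.0", port), PredictHandler).serve_forever()

# A container entrypoint would call main() to start serving.
```

Keeping the model call in a plain function such as `predict` makes it easy to unit-test the logic separately from the HTTP layer and to swap in a real model later.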

Image 1: Critical Components of Google Cloud AI Deployment

Critical Components of Google Cloud AI Deployment

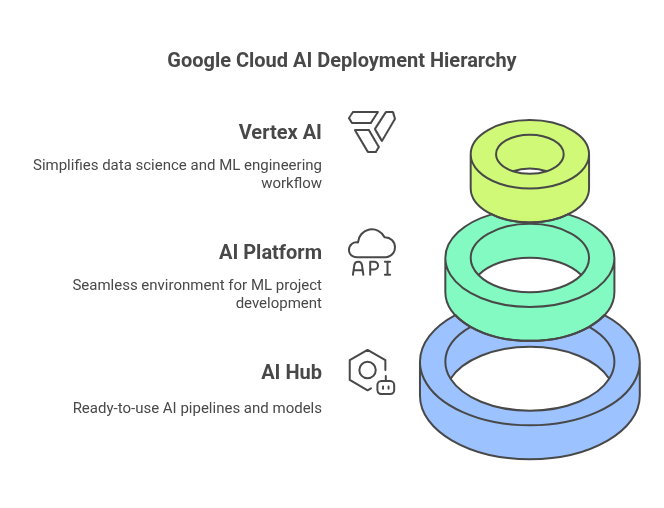

The following components serve critical roles in Google Cloud AI deployment as a comprehensive ecosystem propelling the entire machine-learning lifecycle. From data processing and model training to deployment and scaling, here is how each component fits into the Google Cloud AI Deployment strategy:

- Vertex AI’s End-to-End ML Workflow Integration: Vertex AI unifies the workflows of data science, data engineering, and ML engineering. It allows AI models to be developed, trained, and deployed in one place, with integrated access to AutoML, Model Garden, and Generative AI tools. This enables businesses to build and scale AI applications efficiently using pre-built models, custom training options, and fully managed infrastructure.

- AI Platform – ML Project Development and Deployment: The platform provides a seamless collaborative environment for data scientists, machine learning engineers, and data engineers, prioritizing an easy transition of a model through its development to application in the real world. It enables users to move smoothly through the entire lifecycle of an ML project from ideation and experimentation to deployment in production. This is a key component for organizations that want to produce AI models with minimal friction.

- AI Hub – Pre-Built AI Components for Fast Deployment: AI Hub is a hosting repository of AI components, including ready-to-use AI pipelines, ML algorithms, and pre-trained models. This means that businesses can now speed up their AI deployment by leveraging pre-built solutions instead of developing models from scratch. It’s especially convenient for organizations looking to provide off-the-shelf AI solutions with minimal changes.

Benefits of Cloud Run for Scalable and Efficient AI Deployment

Cloud Run integrates with Google Cloud AI services so that businesses can deploy and scale AI models without managing servers. It is well suited to containerized AI workloads: deploying inference models, handling API requests, serving predictions in real time, and scaling automatically. You pay for Cloud Run only while the app is handling work, so you can reduce operating costs while staying hands-off even in high-volume situations. Whether you’re deploying a chatbot, a recommendation engine, or a generative AI model, Cloud Run provides a flexible, serverless environment to help accelerate AI-based innovation.

Aside from easy deployment, Cloud Run offers numerous advantages, such as automatic scaling, cost efficiency, and simple integration with other Google Cloud tools, that make it ideal for scalable AI services.

- Automatic Scaling: The automatic scaling built into Cloud Run allows your AI application to handle fluctuations in demand. Your AI models can absorb extra load during peak traffic and shrink back during quiet periods, without manual tuning. This elasticity maintains availability and performance across varying workloads, which makes it a good fit for AI services with variable or unpredictable usage patterns.

- Cost Efficiency: With Cloud Run's pay-as-you-go pricing, businesses are charged only for the resources actually consumed while handling requests. Because instances can scale down to zero when idle, organizations can reduce cloud spending compared to traditional always-on servers. Matching resources to actual demand lets companies run more cost-effectively without compromising the reliability and responsiveness of their AI services.

- Simplified Deployment Process: Running AI applications on Cloud Run is fast and relatively easy with very limited requirements. Developers can easily push their containerized AI models directly from their local development environment or tie them into CI/CD pipelines for automated deployments. The streamlined process allows teams to focus on building and optimizing their AI solutions rather than navigating complex infrastructure management or manual deployment configurations.

- Integration with Other Google Services: Cloud Run seamlessly integrates with other Google Cloud services like BigQuery for data insights at scale and Pub/Sub for event-driven architecture. These integrations can be used to build end-to-end, data-powered AI applications by integrating Cloud Run with tools for data processing, analytics, and messaging. The combination of services encourages the development of scalable, end-to-end AI solutions that enrich business insights and decision-making abilities.
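The scaling and cost points above can be illustrated with a rough back-of-the-envelope model. The sketch below is a deliberate simplification and not Cloud Run's actual autoscaling algorithm: it assumes each instance serves up to `concurrency` simultaneous requests, that the service scales to zero when idle, and that a configured `max_instances` caps growth. The numeric values are illustrative only.

```python
import math


def instances_needed(in_flight_requests, concurrency=80, max_instances=100):
    """Estimate how many instances a given number of simultaneous requests needs.

    Simplified model (assumption): one instance per `concurrency` in-flight
    requests, zero instances when idle, capped at `max_instances`.
    """
    if in_flight_requests < 0 or concurrency < 1 or max_instances < 1:
        raise ValueError("inputs must be non-negative, with positive limits")
    if in_flight_requests == 0:
        return 0  # scaled to zero: nothing running while idle
    return min(max_instances, math.ceil(in_flight_requests / concurrency))


for load in (0, 50, 400, 20000):
    print(load, "in-flight requests ->", instances_needed(load), "instances")
```

For inference workloads that are memory-heavy or GPU-bound, a much lower concurrency per instance is often appropriate, which in this model translates directly into more instances for the same traffic.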

Steps for Deploying Generative AI Models on Cloud Run

Generative AI has grown rapidly in popularity, with applications that generate new content from patterns learned in existing data. Deploying generative AI models poses unique challenges, but these can be managed with Google Cloud’s infrastructure and the services provided by Cloud Run.

- Model Development: The process begins by developing a generative model with TensorFlow or another machine learning framework. Ensure that the model is trained on high-quality data relevant to your application.

- Containerization: Once the model is trained, package it in a Docker container together with all its dependencies to ensure consistent behavior across different environments.

- Deployment: Push the container image to Google Container Registry (or its successor, Artifact Registry), then deploy it to Cloud Run, setting the memory allocation and concurrency limits based on your application’s requirements.

- Monitoring and Optimization: Once the service is deployed, use Google Cloud's monitoring tools to track the application's performance. Use those observations to make the improvements needed to maximize effectiveness and efficiency.
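The deployment step can be scripted. The following sketch only builds a `gcloud run deploy` command with memory, concurrency, and instance limits, and prints it without executing anything. The service name, image path, region, and limit values are hypothetical placeholders; substitute whichever registry path you actually pushed your image to.

```python
import shlex


def build_deploy_command(service, image, region,
                         memory="2Gi", concurrency=8, max_instances=10):
    """Return the argument list for deploying a container image to Cloud Run.

    The default limits are illustrative assumptions, not recommendations.
    """
    return [
        "gcloud", "run", "deploy", service,
        "--image", image,
        "--region", region,
        "--memory", memory,
        "--concurrency", str(concurrency),
        "--max-instances", str(max_instances),
    ]


# Hypothetical names, used only for illustration.
cmd = build_deploy_command(
    service="genai-demo",
    image="gcr.io/example-project/genai-demo:latest",
    region="us-central1",
)
print(shlex.join(cmd))
```

To actually deploy, the list could be passed to `subprocess.run(cmd, check=True)`, assuming the `gcloud` CLI is installed and authenticated. Building the command as a list rather than a single string avoids shell-quoting problems and makes it easy to reuse in a CI/CD pipeline.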

Data Transformation Strategies for Effective Deployment

For the successful deployment of AI, it is crucial to have an established structured approach for data transformation. By following best practices such as cleaning, normalizing, and conducting feature engineering on the data, you can maximize the model’s accuracy and the performance of the application. In addition, timely updating of the dataset and splitting the data into training, validation, and testing subsets ensure that the model remains relevant and flexible. These data transformation strategies are the linchpin for successful deployment and the long-term efficiency of AI solutions.

Best Practices for Data Transformation include:

- Data cleaning: Remove duplicate values, fill missing values in the appropriate places, and correct inconsistencies in a dataset before training the model.

- Normalization: Scale the numerical features so that they contribute equally during model training. Standard techniques include min-max scaling or z-score normalization.

- Feature Engineering: Generate novel features from the existing data that might provide new insights or improve the model’s performance.

- Split data: Split the dataset into training, validation, and test sets to evaluate performance before deploying the model.

- Continuous Data Updates: Have a strategy for frequently updating your dataset with fresh information to keep your models relevant over time.
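The practices above can be sketched in a few lines of plain Python. This is a minimal illustration under simple assumptions: rows are tuples of numbers, missing values are represented as `None` and filled with the column mean, and every column has at least one known value. In practice a library such as pandas or a managed service like Dataflow would usually do this work; the function names here are hypothetical.

```python
import random
import statistics


def clean(rows):
    """Drop exact duplicate rows and fill missing values with the column mean."""
    unique = list(dict.fromkeys(rows))  # keeps first occurrence, preserves order
    n_cols = len(unique[0])
    means = [
        statistics.fmean(r[c] for r in unique if r[c] is not None)
        for c in range(n_cols)
    ]
    return [
        tuple(means[c] if r[c] is None else r[c] for c in range(n_cols))
        for r in unique
    ]


def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Center values on the mean and divide by the population standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


def split(rows, train=0.7, val=0.15, seed=42):
    """Shuffle reproducibly, then cut into training, validation, and test sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


raw = [(1.0, 10.0), (2.0, None), (2.0, None), (3.0, 30.0)]
cleaned = clean(raw)
scaled = min_max_scale([row[1] for row in cleaned])
train_set, val_set, test_set = split(list(range(20)))
print(cleaned, scaled, len(train_set), len(val_set), len(test_set))
```

Fixing the shuffle seed keeps the split reproducible between runs, which matters when comparing model versions against the same validation and test sets.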

Case Study: Niveus Solutions and Cloud Run

Niveus Solutions has successfully deployed scalable AI services on Cloud Run for its clients. Here are some of our case studies:

- Healthcare Cloud Solutions With GCP: This application was built on Cloud Run to analyze patient records and provide feedback on treatment options based on historical data. It scaled automatically to accommodate surges in patient flow during peak hours, demonstrating that Cloud Run is a sound choice for AI applications.

- E-commerce App Development – Beauty & Wellness Platform: Niveus Solutions helped an e-commerce client deploy generative AI software that tailored marketing content to customer behavior patterns. The team made sure the application could call hundreds of remote APIs during promotional events, using Cloud Run's capabilities to prevent downtime and performance degradation.

Conclusion

In conclusion, Google Cloud AI deployment offers organizations a suitable platform to efficiently develop, train, and deploy AI models. By using services like Cloud Run, Vertex AI, and AI Hub, businesses can automate the entire machine learning lifecycle and realize the full potential of AI-driven solutions. Google Cloud provides flexible, cost-efficient infrastructure along with seamless integration capabilities for businesses that want to deploy high-performance AI applications and drive innovation and operational efficiency in today’s competitive environment.

Additionally, Niveus Solutions uses the powerful toolset of Google Cloud to help its clients build scalable AI applications. With deep expertise in delivering practical solutions across industries, Niveus Solutions helps businesses harness the full potential of AI, driving performance, growth, and AI-led innovation to stay ahead in a competitive market.